Evaluating Generative Adversarial Networks (GANs)

Domino | 2019-12-18

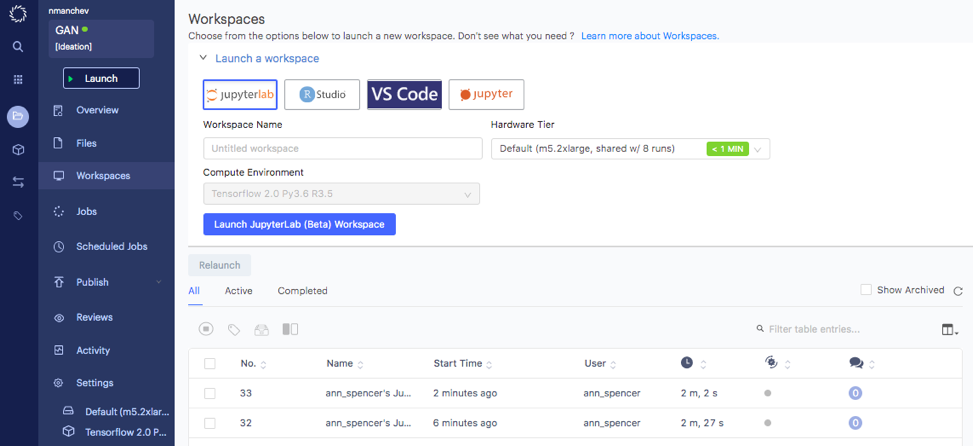

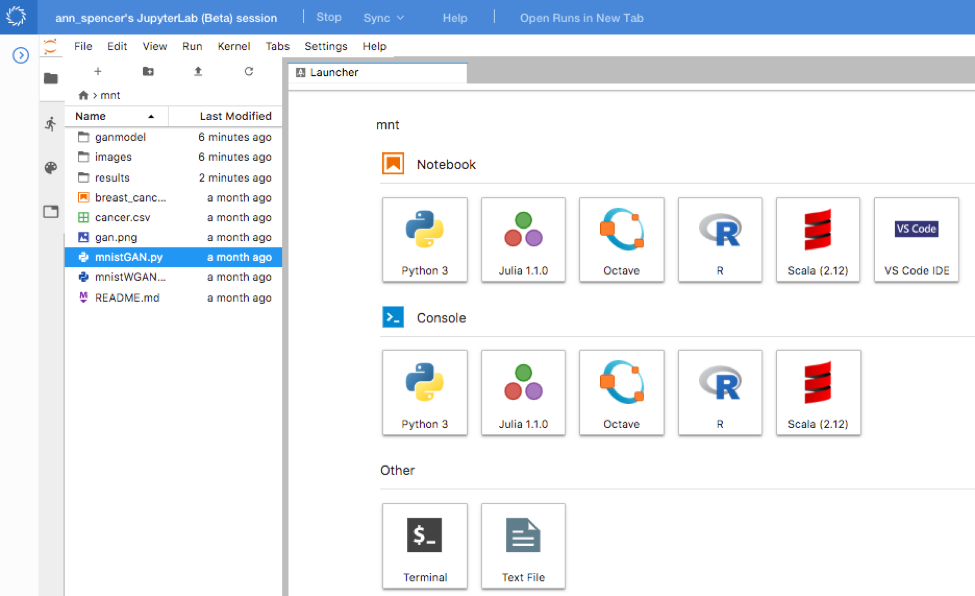

This article provides concise insights into GANs to help data scientists and researchers assess whether to investigate GANs further. If you are interested in a tutorial as well as hands-on code examples within a Domino project, then consider attending the upcoming webinar, “Generative Adversarial Networks: A Distilled Tutorial”.

Introduction

With growing mainstream attention on deepfakes, Generative Adversarial Networks (GANs) have entered the spotlight as well. Unsurprisingly, this attention may lead data scientists and researchers to field questions about GANs, or to assess for themselves whether to use GANs in their own workflows. While Domino offers a platform where industry can leverage its choice of languages, tools, and infrastructure to support model-driven workflows, we are also committed to helping data scientists and researchers assess whether a framework like GANs will accelerate their work. This blog post provides high-level insights into GANs. If you are interested in a tutorial as well as hands-on code examples within a Domino project, consider attending the upcoming webinar, “Generative Adversarial Networks: A Distilled Tutorial”, presented by Nikolay Manchev, Domino’s Principal Data Scientist for EMEA. Both the Domino project and the webinar provide more depth than this blog post, as they cover an implementation of a basic GAN model and demonstrate how adversarial networks can be used to generate training samples.

Why GANs? Consider discriminative versus generative

The pros and cons of discriminative versus generative classifiers have been debated for years. Discriminative models use observed data to capture the conditional probability of a label given an observation, p(y | x). From a statistical perspective, logistic regression is an example of a discriminative approach. From a deep learning perspective, neural network classifiers trained with the back-propagation algorithm (backprop) are a successful example of the discriminative approach.
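Here is a minimal sketch of the discriminative approach. It fits a one-feature logistic regression, p(y = 1 | x) = sigmoid(w * x + b), by plain gradient descent on the mean log-loss. The toy data, learning rate, and step count are illustrative assumptions and do not come from the original post. Note that the model only describes the label given x. It never models how x itself is distributed.

```python
import math


def sigmoid(t: float) -> float:
    """Logistic function mapping a real-valued score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))


def fit_logistic(xs, ys, lr=0.1, steps=500):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by gradient descent on the mean log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradient of the mean negative log-likelihood with respect to w and b.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data: negative x values are class 0, positive x values are class 1.
xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
w, b = fit_logistic(xs, ys)
print(f"p(y=1 | x=1.0)  = {sigmoid(w * 1.0 + b):.3f}")
print(f"p(y=1 | x=-1.0) = {sigmoid(w * -1.0 + b):.3f}")
```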

Generative models differ in that they learn the joint probability distribution p(x, y) of observations and labels. Because a generative model learns the distribution of the data, it can indicate how likely a given example is. Yet, in the paper “Generative Adversarial Nets,” Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville and Yoshua Bengio argued that

“Deep generative models have had less of an impact [than discriminative], due to the difficulty of approximating many intractable probabilistic computations that arise in maximum likelihood estimation and related strategies, and due to difficulty of leveraging the benefits of piecewise linear units in the generative context. We propose a new generative model estimation procedure that sidesteps these difficulties.”
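For contrast with the discriminative view, here is a minimal sketch of a simple generative classifier. It assumes one Gaussian per class, which is a hypothetical modelling choice and not something the post prescribes. The model estimates the class prior p(y) and the class-conditional density p(x | y), and their product is the joint p(x, y). From the joint, it can score how likely an observation is, p(x), and recover p(y | x) via Bayes' rule. It can also sample new data, which a purely discriminative model cannot do.

```python
from statistics import NormalDist, fmean, pstdev


def fit_generative(xs, ys):
    """Estimate the prior p(y) and a Gaussian p(x | y) for each class label."""
    model = {}
    for label in sorted(set(ys)):
        points = [x for x, y in zip(xs, ys) if y == label]
        prior = len(points) / len(xs)
        model[label] = (prior, NormalDist(fmean(points), pstdev(points)))
    return model


def joint(model, x, y):
    """p(x, y) = p(y) * p(x | y)."""
    prior, dist = model[y]
    return prior * dist.pdf(x)


def marginal(model, x):
    """p(x) = sum over y of p(x, y): how likely the observation x is."""
    return sum(joint(model, x, y) for y in model)


def posterior(model, x, y):
    """p(y | x), recovered from the joint via Bayes' rule."""
    return joint(model, x, y) / marginal(model, x)


# Hypothetical toy data: a one-feature, two-class problem.
xs = [-2.0, -1.5, -1.0, -0.5, 0.5, 1.0, 1.5, 2.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
model = fit_generative(xs, ys)
print(posterior(model, 1.0, 1))                       # class probability, as a discriminative model gives
print(marginal(model, 1.25) > marginal(model, 10.0))  # a typical x is more likely than an outlier
print(model[1][1].samples(3, seed=0))                 # draw three new x values for class 1
```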

The procedure Goodfellow et al. proposed was the GAN, which they described as follows:

“In the proposed adversarial nets framework, the generative model is pitted against an adversary: a discriminative model that learns to determine whether a sample is from the model distribution or the data distribution. The generative model can be thought of as analogous to a team of counterfeiters, trying to produce fake currency and use it without detection, while the discriminative model is analogous to the police, trying to detect the counterfeit currency. Competition in this game drives both teams to improve their methods until the counterfeits are indistinguishable from the genuine articles.”
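The paper gives the counterfeiter-versus-police game a precise form. The discriminator D and the generator G play a minimax game over the value function V(D, G) = E_{x~p_data}[log D(x)] + E_{z~p_z}[log(1 - D(G(z)))].

For a fixed generator, the best discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)). Substituting D* back into V gives -log 4 + 2 * JSD(p_data || p_g). That value is minimized exactly when the generator's distribution p_g matches p_data, at which point D* outputs 1/2 everywhere: the counterfeits are indistinguishable.

The sketch below checks these results numerically on small discrete distributions. The distributions are hypothetical, both dictionaries are assumed to share the same keys, and no networks are trained.

```python
import math


def optimal_discriminator(p_data, p_g):
    """D*(x) = p_data(x) / (p_data(x) + p_g(x)); assumes p_data and p_g share keys."""
    return {x: p_data[x] / (p_data[x] + p_g[x])
            for x in p_data if p_data[x] + p_g[x] > 0}


def value(p_data, p_g, d):
    """V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_g}[log(1 - D(x))]."""
    real = sum(p * math.log(d[x]) for x, p in p_data.items() if p > 0)
    fake = sum(p * math.log(1.0 - d[x]) for x, p in p_g.items() if p > 0)
    return real + fake


def jsd(p, q):
    """Jensen-Shannon divergence between two discrete distributions (natural log)."""
    m = {x: 0.5 * (p[x] + q[x]) for x in p}

    def kl_to_m(a):
        return sum(a[x] * math.log(a[x] / m[x]) for x in a if a[x] > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


# Hypothetical distributions over three "banknote designs".
p_data = {"a": 0.5, "b": 0.3, "c": 0.2}
p_g_bad = {"a": 0.9, "b": 0.05, "c": 0.05}   # an unconvincing counterfeiter
p_g_match = dict(p_data)                     # a perfect counterfeiter

for p_g in (p_g_bad, p_g_match):
    d_star = optimal_discriminator(p_data, p_g)
    v = value(p_data, p_g, d_star)
    print(round(v, 6), round(-math.log(4) + 2 * jsd(p_data, p_g), 6))
```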

GANs: Potentially useful for semi-supervised learning and multimodal settings

GANs can be useful for semi-supervised learning, that is, for datasets that include unlabeled data or where only a fraction of the samples are labeled. Generative models are also useful when a single input corresponds to multiple correct answers (i.e., multimodal settings). In his follow-up NIPS 2016 tutorial, Goodfellow writes:

“Generative models can be trained with missing data and can provide predictions on inputs that are missing data. One particularly interesting case of missing data is semi-supervised learning, in which the labels for many or even most training examples are missing. Modern deep learning algorithms typically require extremely many labeled examples to be able to generalize well. Semi-supervised learning is one strategy for reducing the number of labels. The learning algorithm can improve its generalization by studying a large number of unlabeled examples, which are usually easier to obtain. Generative models, and GANs in particular, are able to perform semi-supervised learning reasonably well.”

and

"Generative models, and GANs in particular, enable machine learning to work with multi-modal outputs. For many tasks, a single input may correspond to many different correct answers, each of which is acceptable. Some traditional means of training machine learning models, such as minimizing the mean squared error between a desired output and the model’s predicted output, are not able to train models that can produce multiple different correct answers."
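A few lines of arithmetic can check the mean-squared-error point in the last quotation. In the hypothetical task below, one input has two equally valid answers, -1.0 and +1.0. The single prediction that minimizes MSE is their average, 0.0, which is not a correct answer at all. MSE even scores it better than either correct answer.

By contrast, drawing from the distribution of answers always yields a correct answer. Here a plain random choice stands in for a generative model; it is not a GAN.

```python
import random
from statistics import fmean


def mse(prediction, targets):
    """Mean squared error of one fixed prediction against every valid target."""
    return fmean((t - prediction) ** 2 for t in targets)


# Hypothetical task: one input whose correct answers are -1.0 and +1.0, equally often.
targets = [-1.0, 1.0] * 50

# Search a grid of single predictions for the MSE minimizer.
candidates = [i / 100 for i in range(-150, 151)]
best = min(candidates, key=lambda c: mse(c, targets))
print(best, mse(best, targets))   # 0.0 1.0 : the average, which is not a valid answer
print(mse(1.0, targets))          # 2.0 : a valid answer scores worse under MSE

# Sampling from the answer distribution (a stand-in for a generative model).
rng = random.Random(0)
samples = [rng.choice([-1.0, 1.0]) for _ in range(5)]
print(samples)                    # every sample is one of the valid answers
```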

Conclusion

This blog post focuses on concise, high-level insights into GANs to help researchers assess whether to investigate them further. If you are interested in a tutorial, check out the upcoming webinar “Generative Adversarial Networks: A Distilled Tutorial”, presented by Nikolay Manchev, Domino’s Principal Data Scientist for EMEA. The webinar and the complementary Domino project provide more depth than this blog post, as they cover an implementation of a basic GAN model and demonstrate how adversarial networks can be used to generate training samples.

Domino Data Lab empowers the largest AI-driven enterprises to build and operate AI at scale. Domino’s Enterprise AI Platform provides an integrated experience encompassing model development, MLOps, collaboration, and governance. With Domino, global enterprises can develop better medicines, grow more productive crops, develop more competitive products, and more. Founded in 2013, Domino is backed by Sequoia Capital, Coatue Management, NVIDIA, Snowflake, and other leading investors.