Introduction to Deep Learning and Neural Networks

David Weedmark2021-11-18 | 12 min read

Phrases like deep learning and neural networks may be synonymous with artificial intelligence in the public’s minds, but for the data science teams that work with them, they are a breed of their own. Behind the dashboards of self-driving cars and below the online apps we use every day are a series of complex, interweaving algorithms that were inspired by the neurobiology of the human brain.

What Is Deep Learning?

Deep learning is a type of machine learning, or ML, designed to predict an output (Y) based on an input (X). It’s typically used when data with a large number of input attributes are involved. Deep learning works by first recognizing patterns between inputs and outputs and then using that information to predict new outputs when it’s given data it hasn’t encountered before. For example, a deep learning algorithm can predict a stock’s future performance by providing it with data on its performance in the past.

Deep Learning vs. Machine Learning

ML is a broad category of computer systems that can learn and adapt without human intervention by using algorithms that analyze data, discover patterns and draw inferences from those patterns. ML is a subset of artificial intelligence (AI). Deep learning is one subset of machine learning, while neural networks are a subset of deep learning.

Why Is Deep Learning Used?

Deep learning can have an advantage over other machine learning models when there is a lot of data to process and when the organization has the infrastructure to support its numerous calculations within a reasonable time. Because deep learning doesn’t allow for feature inspection and doesn't require extensive feature engineering, it’s particularly useful when you are dealing with large amounts of unstructured data.

The more data you have available to use, the more likely deep learning will be the better solution. As your data scales, deep learning becomes much more efficient. In a world where available data does nothing but scale week after week and year after year, it’s likely the adoption of deep learning will continue to escalate.

How Does Deep Learning Work?

Successful deep learning models are created and implemented using the data science lifecycle (DSLC). This has four stages: manage, develop, deploy and monitor.

- Manage: The problem statement is defined, algorithms are chosen, and data is acquired.

- Develop: Multiple models are trained using different algorithms and hyperparameters. The best performing models are then tested on new data to ensure they continue to perform as predicted. With deep learning models, overfitting is often a problem. This occurs when the model becomes too complex and is unable to adapt to new variables it hasn’t encountered before.

- Deploy: The model is moved to its production environment, and users are trained to use it.

- Monitor: The model is monitored to ensure it continues to run smoothly. If problems arise, like bias, concept drift or data drift, automatic monitors can notify the data science team immediately.

Deep learning algorithms analyze input data, find patterns in the data and then predict the output data. While it is the algorithm itself that does that, its effectiveness depends on both the quality of the data and how the data science team has tuned the algorithm's hyperparameters.

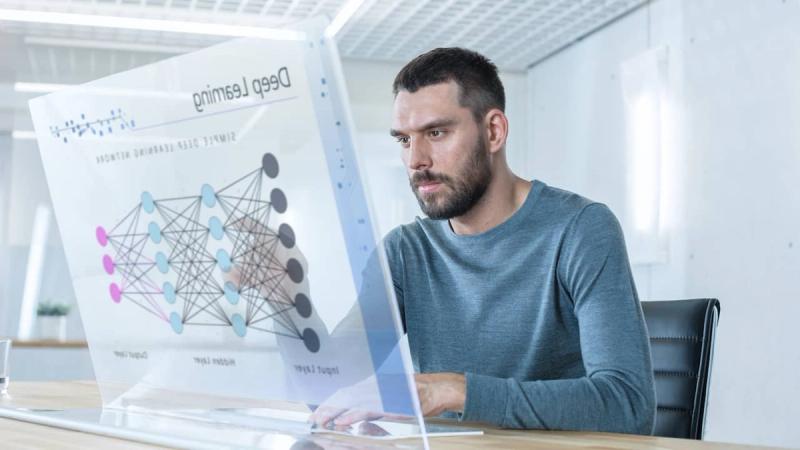

The algorithm works through the data in layers: between the layer of input data, and the layer of output data are multiple hidden layers where the algorithm does its work, sorting, analyzing and making connections between data points (nodes). This is where the word “deep” comes from in deep learning because there are usually many hidden layers between the input and output layers.

After the algorithm has generated predictions in the output layer, it then compares its prediction to the actual output and corrects itself in case of discrepancies using a method called backpropagation. Starting with the output, it goes through the layers backwards, tweaking its parameters until the results closely match the actual output.

The Role of Neural Networks in Deep Learning

Artificial neural networks are sets of algorithms that were inspired by the neurobiology of the human brain. These networks are comprised of artificial neurons that correspond to nodes on each hidden layer of data. Each artificial neuron uses an activation function, which contains a characteristic to turn it on.

Each node accepts multiple weighted inputs, processes them, applies an activation function to the summation of those inputs and then produces an output. The next node then takes the information from the nodes that preceded it and generates its own output.

An example of an activation function is the sigmoid function, which receives the linearly combined inputs of a neuron and rescales it to a range from 0 to 1. This is useful, because the function's output can be interpreted as a valid probability distribution. Moreover the sigmoid is differentiable, allowing the weights of the neuron to be tweaked via backpropagation in case its output does not match the desired target.

It would be overly simplistic to suggest that nodes are arranged in evenly separated layers. The structure of these layers can be exceptionally complicated, and different networks use different layers to give different results. A Feed Forward neural network is such a straightforward network. There are many other network topologies that are currently in use.

Challenges of Deep Neural Networks

Neural networks are certainly not the solution to every problem. With large amounts of complex data, they can be more efficient than other ML models, but the complexity of these models means that more time and resources are required to train, test and deploy them.

With smaller amounts of data, deep learning doesn’t have much of an advantage over other algorithms, like linear regression or SVM (support vector machine).

Opportunities for Growth

Deep learning is more resource-intensive than simpler algorithms. As CPUs and GPUs become more powerful each year, more organizations can take advantage of deep learning today than they were a few years ago. Of particular note are the advancements in GPUs by companies like NVIDIA. Because GPUs have more cores than CPUs, as well as higher memory bandwidth, they are exceptionally powerful for parallel computations, which neural networks require.

This is compounded by adopting distributed computing and cloud computing, which spreads the resource load across multiple systems or uses a more powerful server over the internet.

Another reason for the high adoption of deep learning is the advancement of algorithms themselves that have changed how deep learning works. For example, if you’re training a deep learning model, the RELU function will likely give you far better results than the SIGMOID function because it gives you fewer issues with the vanishing gradient problem.

Types of Learning in Deep Learning

Within deep learning, there are various learning techniques and algorithms used by data scientists and ML practitioners.

Supervised Learning

With the supervised learning technique, deep learning models are trained with output data corresponding to the input data. For example, if you were training a model to recognize email spam, you would supply the model with email data and specify which emails were spam and which were not. The model would then use backpropagation to tune itself to make its predictions match the output data it was supplied with.

Deep learning models that use supervised learning are often used in Natural Language Processing (NLP) which is designed to understand human language, as well as Computer Vision (CV) to process images and videos.

Unsupervised Learning

Unsupervised learning is used when the output data is not supplied to the model during training. In this case, the model is only given input data with the goal of uncovering potentially interesting patterns in the provided dataset.

Examples of neural networks using this technique are Deep Belief Networks (DBN), which are comprised of stacks of either Restricted Boltzmann Machines (RBMs) or Variational Autoencoders (VAEs), and can be used in either an unsupervised or a supervised setting. These are often applied to video recognition, motion capture, image classification or even image generation tasks.

Reinforcement Learning

Reinforcement learning involves training ML models to make a sequence of decisions. The model learns to achieve a goal in an unstructured, complex environment. When used with deep learning algorithms, this is known as Deep Reinforcement Learning (DRL). DRL is used in inventory management, demand forecasting, supply chain management, as well as financial portfolio management and stock market trading.

Transfer Learning

Transfer learning is a technique where a model used for one task is reused as the starting point for another model working on a second task. Transfer learning can be used for Natural Language Processing or Computer Vision. It’s particularly useful when the first model is already trained, speeding up development time. However, its benefits aren’t able to be determined until after the second model has been trained and tested.

Deep Learning in Action

For such a relatively new technology, deep learning has inundated every sector of business. For consumers, deep learning and neural networks are probably most familiar in today’s automotive technology, like the autopilot systems in cars produced by Tesla. Other examples of deep learning used today include:

- eCommerce: used to customize product recommendations to customers based on their unique behavior.

- Security: used to protect computer systems from viruses, as well as credit cardholders from fraud.

- Logistics: used to plan, monitor and modify shipping routes while predicting delivery times.

Because neural networks are universal approximators, there is practically nothing to limit their use in any field humans work in. One of the most difficult fields, and one that was considered impossible just a few years ago, is in the area of medical diagnostics. Yet, neural networks have been making significant inroads in this field as well, with some AI diagnosis models having the same, or slightly better, success at diagnosis over human doctors.

Final Thoughts

Training, testing and deploying deep learning models require a wealth of resources and tools, from comprehensive libraries and a platform that encourages and supports team collaboration and organizational governance.

David Weedmark is a published author who has worked as a project manager, software developer and as a network security consultant.