Domino 5.1 Simplifies Access to Data Sources to Improve Model Velocity

Bob Laurent2022-03-08 | 3 min read

Building a great machine learning model often starts with getting access to critical data – about patients in clinical trials, loans that have defaulted, transactions at individual stores, etc. Despite the hype around creating a “data lake” where all of a company’s data will reside, the reality for most companies is that data is often in a variety of different locations, both on-premises and in the cloud. And, the number of these locations grows quickly within larger organizations, with many different departments, different needs, and different storage requirements.

That’s why we’re adding connectors to an even greater variety of data sources in Domino 5.1. This release, which is available today, builds upon the innovation we brought to market with Domino 5.0 in January to increase model velocity for our many customers. By removing common DevOps barriers, including the need to write code to install drivers and specific libraries, we’re making it easier for data science teams to get access to important data.

How it Works

Last month, Andrew Coleman wrote a detailed blog explaining how data scientists can use pre-built connectors as part of a consistent access pattern for both tabular- and file-based data. In Domino 5.0 we developed native data source connectors for Snowflake, Amazon S3, and Amazon Redshift.

Today, as part of the Domino 5.1 launch, I’m excited to announce the addition of six more connectors for Microsoft Azure Data Lake Storage, Google Cloud Storage, Oracle Database, Microsoft SQL Server, PostgresSQL, and MySQL:

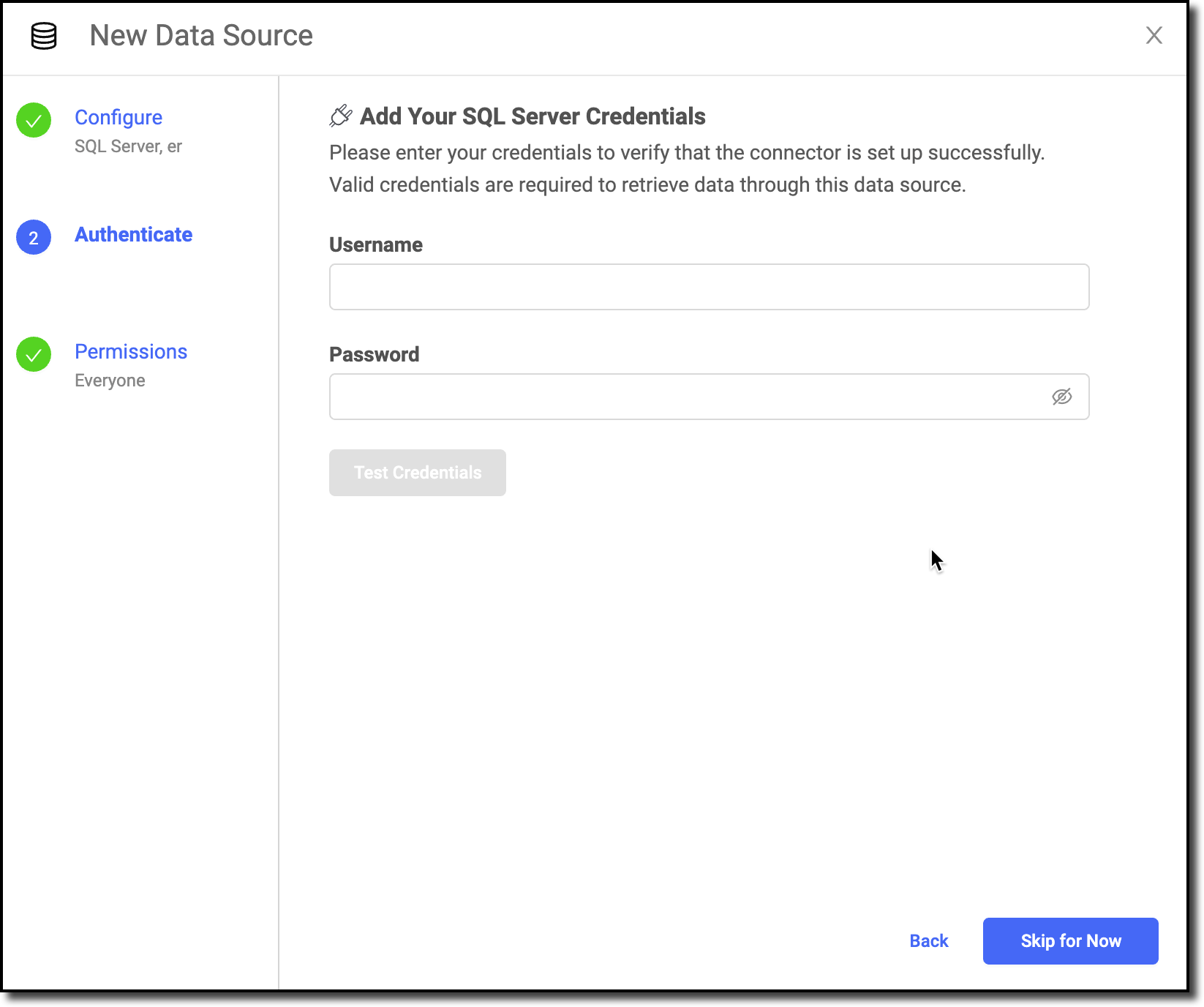

Rather than spending time writing scripts to access data, all a user has to do to set up a connector is go to the “Data” section in Domino, click on “Connect to External Data”, pick the appropriate data source, and fill in basic information including their login credentials. That’s it! Connection properties are stored securely as an access key and secret key in an instance of Hashicorp Vault that is deployed with Domino.

Domino 5.1 also simplifies access to generic External Data Volumes (EDV) to mount and access data on remote file systems – allowing data scientists to use more types of data for better experimentation. Domino lists the projects using each registered EDV to provide insight into how data is being used, and for what purposes.

Conclusion

If you’re keeping score, we now have pre-built connectors for all three of the major cloud providers, as well as to Snowflake. And, if there’s a data source we haven’t built a native connector for, users can create new connectors and share them with colleagues to help democratize access to important data.

For detailed information about how to configure data sources in Domino, you can view our documentation. And, if you missed our Domino 5.0 launch event featuring Atul Gawande (renowned surgeon, author, and performance improvement expert) and Dr. Najat Khan (Chief Data Science Officer and Global Head of Strategy & Operations for Research & Development at the Janssen Pharmaceutical Companies of Johnson & Johnson), you can watch the replay here.

Bob Laurent is the Head of Product Marketing at Domino Data Lab where he is responsible for driving product awareness and adoption, and growing a loyal customer base of expert data science teams. Prior to Domino, he held similar leadership roles at Alteryx and DataRobot. He has more than 30 years of product marketing, media/analyst relations, competitive intelligence, and telecom network experience.