Domino AI Gateway streamlines and governs access to large language models

John Alexander | 2024-03-10 | 5 min read

Businesses have embraced the promise of LLMs. They are eager to add value quickly and reduce costs using SaaS-based LLM services like ChatGPT from OpenAI and Claude from Anthropic. Compared to using custom-built models, leveraging an external model provider via API access offers a shorter time to market, lower maintenance, and better performance for many use cases. No wonder data scientists and users are keen to access these services.

Balancing risk and reward

But, while API access to LLMs shows excellent promise, enterprises must balance the potential revenue gains and cost savings against inherent risks. IT leaders worry that broad access to LLMs comes with various challenges that include:

- Securing API keys and eliminating plain-text key sharing around the organization or, even worse, accidental commits of keys to the codebase.

- Avoiding vendor lock-in or depending on a single provider for all AI services.

- Controlling costs and setting usage limits that account for the business unit, the use case, or overall urgency.

- Managing permissions to control access to each specific model.

- Logging all activity to maintain a record of the request history.

- Ensuring data scientists have a good experience and best practices to follow.

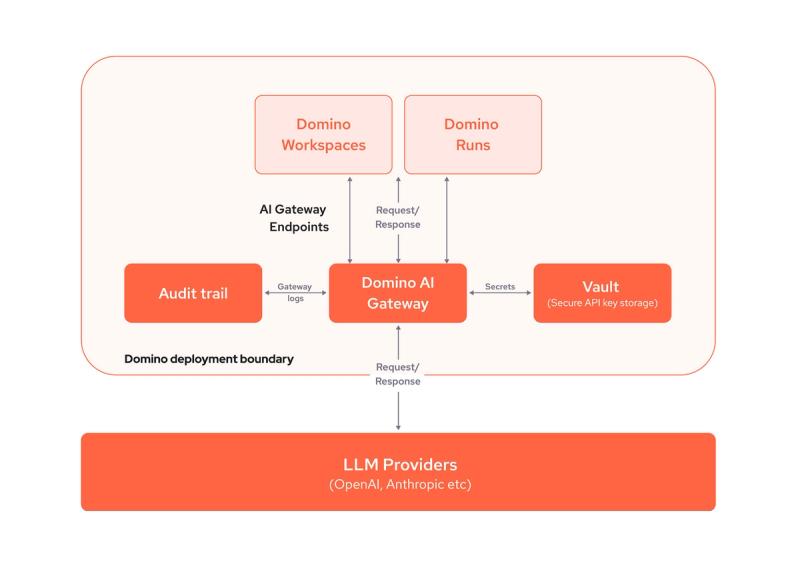

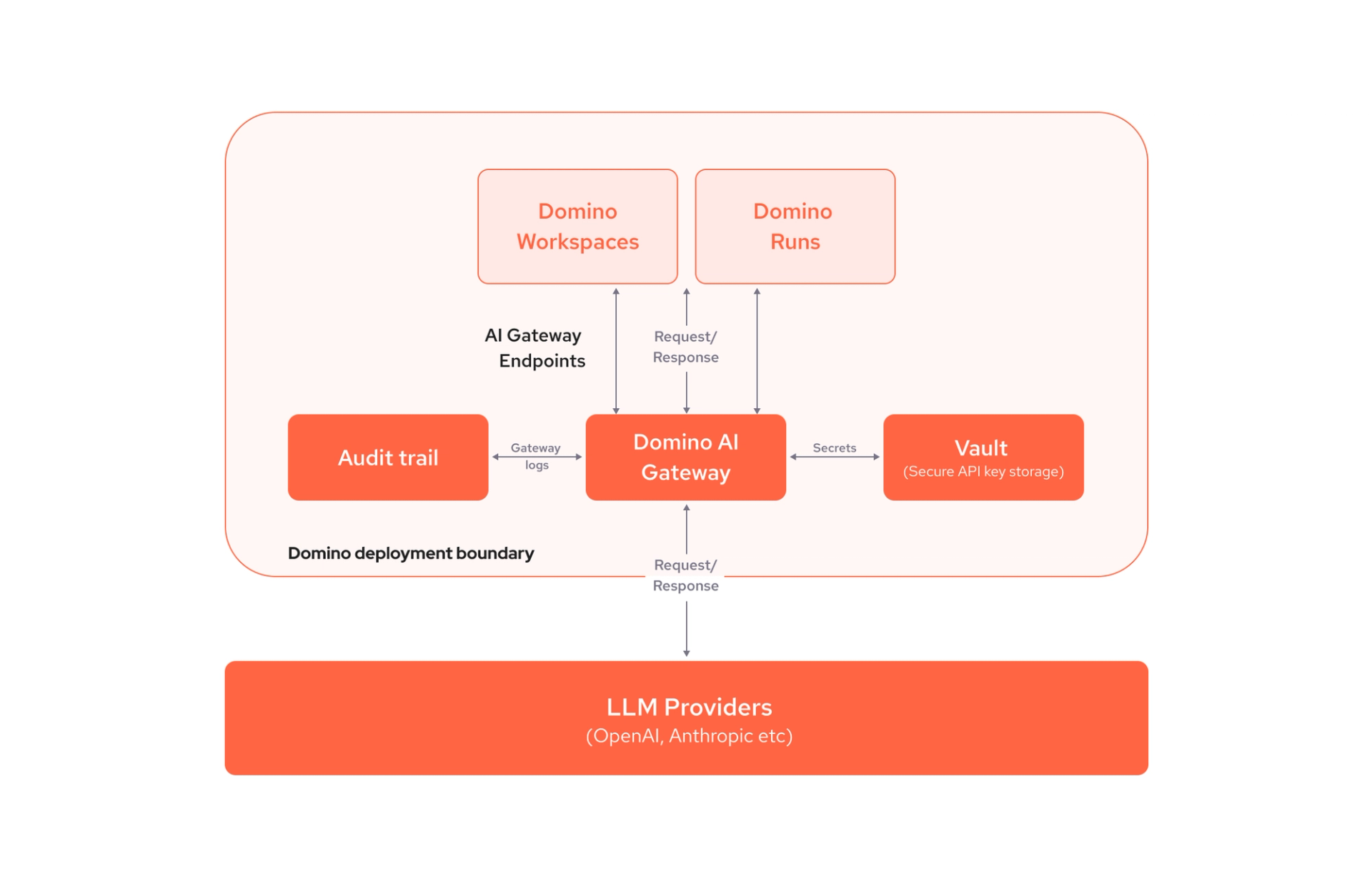

Domino AI Gateway addresses the above problems by hosting an internal service to centralize governance and secure connections to external LLMs. More specifically, the service offers:

- Secure API key management: Prevent accidental leaks and securely manage API keys.

- LLM endpoint management: Admins can configure several endpoints, each representing a model from a particular vendor, such as OpenAI, Anthropic, or Hugging Face. Domino stores the credentials for each endpoint securely. This approach allows you to create multiple endpoints with different credentials. As a result, you can separate billing accounts by organization or project.

- Controlled user access: Admins can manage permissions for each endpoint individually. They can give access only to specific users or user groups.

- Detailed activity logs: Maintain a comprehensive log of LLM activity for thorough auditing.

- Rapid LLM switching: Admins can reroute requests centrally and switch from one LLM provider to another. All the data science team must do is update its code with the name of the new LLM provider.

- Consistent interface for data scientists: Provide a streamlined, consistent interface for data scientists to interact with multiple LLM providers.

- Portable code experience: Domino AI Gateway balances easy API connectivity with control. It mitigates many of the LLM usage challenges mentioned above. With Domino, users can access multiple external LLM providers directly from within their workflow, accelerating development. At the same time, admins control user roles and access levels and ensure users follow security and audit best practices.
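The endpoint model in the list above can be pictured as a small registry. The following is a minimal sketch, not Domino's actual configuration schema: the `Endpoint` class, its field names, and the example endpoint, model, and credential names are all hypothetical. It shows how two endpoints can point at the same vendor model while using different credentials, which is what makes per-team billing separation possible.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical record for one gateway endpoint (illustration only)."""
    name: str            # name that data scientists reference in code
    provider: str        # vendor behind the endpoint
    model: str           # vendor model identifier (placeholder values below)
    credential_ref: str  # pointer to a stored secret, never the key itself


# Two endpoints for the same vendor model, each tied to its own credential,
# so usage can be billed to separate accounts.
ENDPOINTS = [
    Endpoint("marketing-chat", "openai", "example-chat-model", "cred/marketing"),
    Endpoint("research-chat", "openai", "example-chat-model", "cred/research"),
    Endpoint("research-summarize", "anthropic", "example-summary-model", "cred/research"),
]


def endpoints_by_credential(endpoints):
    """Group endpoint names by the credential (billing account) they use."""
    groups = defaultdict(list)
    for ep in endpoints:
        groups[ep.credential_ref].append(ep.name)
    return dict(groups)


billing = endpoints_by_credential(ENDPOINTS)
print(billing)
```

Keeping only a credential reference on the endpoint record, rather than the key, is the design choice that lets the same registry be shown to users without leaking secrets.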

LLM access guardrails

Domino AI Gateway simplifies LLM endpoint permission management. With role-based access controls, admins can give access and permissions to specific users or user groups. AI Gateway logs all LLM requests. The logs provide a complete request history and establish an audit trail. This audit trail offers model approvers an added measure of visibility and confidence.
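To make the combination of group-based permissions and request logging concrete, here is a minimal sketch. It is not how AI Gateway is implemented: the `AuditedAccessControl` class, the log fields, and the user and group names are assumptions chosen for illustration. The point it demonstrates is that every decision, allowed or denied, lands in the log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditedAccessControl:
    """Toy role-based access check that records every decision."""
    # endpoint name -> set of groups allowed to call it (hypothetical data)
    permissions: dict
    # user name -> set of groups the user belongs to (hypothetical data)
    memberships: dict
    audit_log: list = field(default_factory=list)

    def is_allowed(self, user: str, endpoint: str) -> bool:
        user_groups = self.memberships.get(user, set())
        allowed_groups = self.permissions.get(endpoint, set())
        allowed = bool(user_groups & allowed_groups)
        # Log allowed and denied requests alike so the trail is complete.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "endpoint": endpoint,
            "allowed": allowed,
        })
        return allowed


acl = AuditedAccessControl(
    permissions={"research-chat": {"research"}},
    memberships={"ana": {"research"}, "bo": {"marketing"}},
)
ana_ok = acl.is_allowed("ana", "research-chat")
bo_ok = acl.is_allowed("bo", "research-chat")
print(ana_ok, bo_ok, len(acl.audit_log))
```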

Domino AI Gateway secures API key storage, eliminating plain-text key sharing around the organization and, even worse, accidental commits of keys to the codebase. When an admin creates an endpoint, the API key is automatically stored securely in Domino’s central vault service. That key is never exposed to data scientists and users when they access the LLM.
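The key-handling flow described above can be sketched as follows. This is an in-memory toy, not Domino's vault service: `SecretVault`, `create_endpoint`, `describe_endpoint`, and the placeholder key are hypothetical names introduced for illustration. It shows the separation the paragraph describes: the admin hands over the key once, the endpoint keeps only an opaque reference, and the user-facing view carries no credential details.

```python
import secrets


class SecretVault:
    """Toy in-memory stand-in for a central secret store (illustration only)."""

    def __init__(self):
        self._secrets = {}

    def store(self, api_key: str) -> str:
        """Store a key and return an opaque reference to it."""
        ref = "vault/" + secrets.token_hex(8)
        self._secrets[ref] = api_key
        return ref

    def resolve(self, ref: str) -> str:
        """Return the key for a reference; only gateway code should call this."""
        return self._secrets[ref]


def create_endpoint(vault: SecretVault, name: str, api_key: str) -> dict:
    """Admin-side step: the key goes into the vault, the endpoint keeps a reference."""
    return {"name": name, "credential_ref": vault.store(api_key)}


def describe_endpoint(endpoint: dict) -> dict:
    """What a data scientist sees: the endpoint name, but no credential details."""
    return {"name": endpoint["name"]}


vault = SecretVault()
endpoint = create_endpoint(vault, "research-chat", "sk-example-not-a-real-key")
print(describe_endpoint(endpoint))
```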

To deliver these capabilities, all API calls to external LLMs must be routed through AI Gateway service-defined endpoints.
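As a rough illustration of routing every call through named endpoints, the sketch below uses a toy `Gateway` class with stub provider functions. None of these names come from Domino; the stubs only echo the prompt so the example runs offline, where a real gateway would call each vendor's API. It shows why the caller's code stays the same apart from the endpoint name when the provider changes.

```python
from typing import Callable, Dict


# Stub provider adapters (hypothetical). A real gateway would call each
# vendor's API here; these stand-ins only echo the prompt.
def call_provider_a(prompt: str) -> str:
    return f"[provider-a] {prompt}"


def call_provider_b(prompt: str) -> str:
    return f"[provider-b] {prompt}"


class Gateway:
    """Toy router: every request names an endpoint, never a vendor URL or key."""

    def __init__(self, routes: Dict[str, Callable[[str], str]]):
        self._routes = routes

    def query(self, endpoint: str, prompt: str) -> str:
        if endpoint not in self._routes:
            raise KeyError(f"unknown endpoint: {endpoint}")
        return self._routes[endpoint](prompt)


gateway = Gateway({"chat-a": call_provider_a, "chat-b": call_provider_b})

# Switching providers is a one-word change in the caller's code.
ENDPOINT_NAME = "chat-a"
print(gateway.query(ENDPOINT_NAME, "Summarize Q3 results"))
```

Because callers depend only on the endpoint name and a single `query` signature, the same single entry point is also the natural place to apply the access checks and logging described earlier.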

Convenient access to LLMs

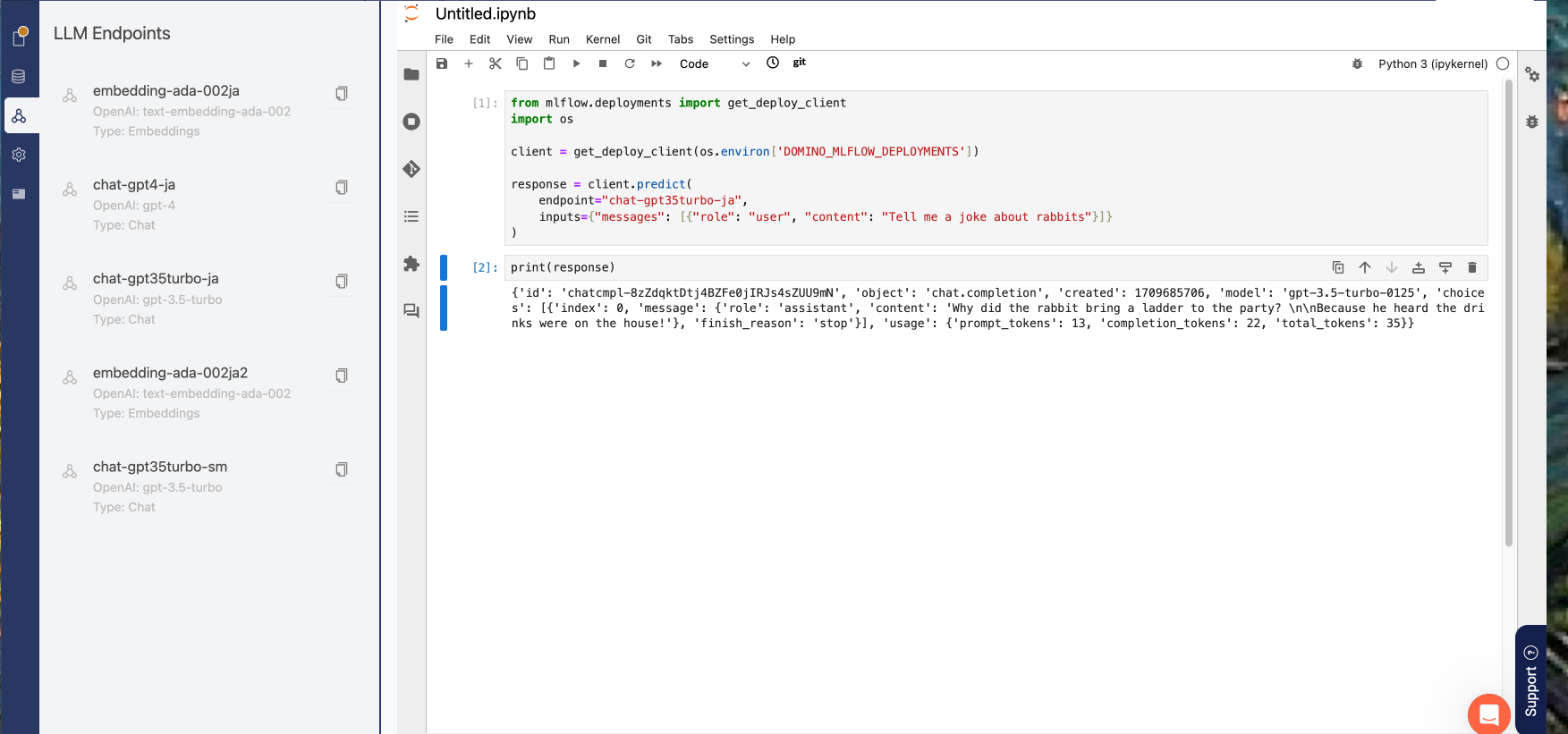

Developing LLM applications with Domino AI Gateway is seamless. The list of available LLM endpoints sits next to your Jupyter Notebook UI, as shown in the screenshot above. Each endpoint in the list provides sample code that lets you connect quickly to any of the listed LLMs.

AI Gateway unlocks the full potential of including multiple external LLMs within your Domino workflow. It gives data scientists and developers flexibility and freedom of choice, and it gives IT admins control and the flexibility to avoid lock-in to a particular vendor or service. Enjoy the ease of use, security, and auditability features Domino AI Gateway brings to your generative AI application development efforts. Welcome to a new era of enhanced LLM integration, security, and productivity.

To see the Domino AI Gateway in action, please join us for the upcoming Scaling GenAI webinar on Mar 19, 2024. The Domino platform delivers enterprise AI built by everyone, scalable by design, and responsible by default. Learn more about how to make AI accessible, manageable, and beneficial for all.

John is a Director of Technical Marketing at Domino Data Lab, a leading platform for data science and machine learning. He has over 25 years of experience in applying technology to real-world situations. He also loves to share his knowledge and passion for AI through teaching, writing, and speaking.