8 modeling tools for building complex algorithms

David Weedmark | 2021-08-09 | 6 min read

For a model-driven enterprise, having access to the appropriate tools can mean the difference between operating at a loss with a string of late projects lingering ahead of you or exceeding productivity and profitability forecasts. This is no exaggeration by any means. With the right tools, your data science teams can focus on what they do best – testing, developing and deploying new models while driving forward-thinking innovation.

What are modeling tools?

In general terms, a model is a series of algorithms that can solve problems when given appropriate data. Just as the human brain can solve problems by applying lessons we learn from past experiences to new situations, a model is trained on a set of data and can solve problems with new sets of data.
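As a minimal sketch of that idea, the snippet below "trains" a one-variable linear model on a few known points and then applies it to a value it has not seen. The data and the `fit_line` and `predict` helper names are hypothetical and chosen only for illustration.

```python
from statistics import mean

def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    slope = covariance / variance
    intercept = y_bar - slope * x_bar
    return slope, intercept

def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new input."""
    slope, intercept = model
    return slope * x + intercept

# Hypothetical training data that follows y = 2x + 1.
train_x = [1, 2, 3, 4]
train_y = [3, 5, 7, 9]

model = fit_line(train_x, train_y)   # learn from past examples
prediction = predict(model, 10)      # apply to a new situation
```

Real models have far more parameters, but the two-step pattern of fitting on known data and predicting on new data is the same.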

Importance of modeling tools

Without unrestricted access to modeling tools, data science teams are handcuffed in their work. Open-source innovations abound, and new ones will keep arriving. Data scientists need access to modern tools that are approved by IT but available in a self-service manner.

Types of modeling tools

Before selecting a tool, you should first know your end goal – machine learning or deep learning.

Machine learning identifies patterns in data using algorithms that are primarily based on traditional methods of statistical learning. It’s most helpful in analyzing structured data.

Deep learning is sometimes considered a subset of machine learning. Based on the concept of neural networks, it’s useful for analyzing images, videos, text and other unstructured data. Deep learning models also tend to be more resource-intensive, requiring more CPU and GPU power.

Deep learning modeling tools

PyTorch: Free, open-source library primarily used for deep learning applications like natural language processing and computer vision. It is based on the Torch library but uses Python rather than the Lua programming language used by Torch. Much of PyTorch was developed by Facebook’s AI Research lab, which released it under the modified BSD open-source license. Examples of deep learning software built on PyTorch include Tesla’s Autopilot and Uber’s Pyro.
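For a feel of what PyTorch code looks like, here is a minimal sketch of a training loop that fits a single linear layer to toy data. The data, learning rate and step count are assumptions picked for illustration, not recommendations.

```python
import torch
from torch import nn

torch.manual_seed(0)  # make the random weight initialization repeatable

# Hypothetical toy data that follows y = 2x + 1.
x = torch.linspace(-1.0, 1.0, 32).unsqueeze(1)
y = 2.0 * x + 1.0

model = nn.Linear(1, 1)                       # one weight, one bias
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.MSELoss()

for _ in range(200):
    optimizer.zero_grad()                     # clear gradients from the last step
    loss = loss_fn(model(x), y)               # forward pass
    loss.backward()                           # autograd computes gradients
    optimizer.step()                          # update the parameters

final_loss = loss_fn(model(x), y).item()
```

The same loop structure carries over to much larger networks; only the model definition and the data loading change.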

TensorFlow: Like PyTorch, this is an open-source library with a Python interface; it was created by Google. One of its primary benefits compared to PyTorch is that it supports additional languages beyond Python. It’s also often considered to be more production-oriented than PyTorch, although this is debatable since both tools continuously update their features. You can create deep learning models within TensorFlow or use wrapper libraries for models built on top of TensorFlow. It’s used by Airbnb to caption and categorize photos, while GE Healthcare uses it to identify anatomy on MRIs of the brain.
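The sketch below shows one low-level way to work in TensorFlow directly: recording a computation with `tf.GradientTape` and applying plain gradient descent by hand. The toy data, learning rate and step count are assumptions for illustration only.

```python
import tensorflow as tf

# Trainable parameters of a hypothetical model y = w * x + b.
w = tf.Variable(0.0)
b = tf.Variable(0.0)

# Toy data that follows y = 2x + 1.
x = tf.constant([0.0, 1.0, 2.0, 3.0])
y = 2.0 * x + 1.0

learning_rate = 0.05
for _ in range(500):
    with tf.GradientTape() as tape:
        # Mean squared error between predictions and targets.
        loss = tf.reduce_mean(tf.square(w * x + b - y))
    grad_w, grad_b = tape.gradient(loss, [w, b])
    # Manual gradient descent step on each variable.
    w.assign_sub(learning_rate * grad_w)
    b.assign_sub(learning_rate * grad_b)

learned_w = float(w.numpy())
learned_b = float(b.numpy())
```

In practice most teams let a built-in optimizer and a higher-level API handle the update step; the manual version is shown only to make the mechanics visible.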

Keras: API built on top of TensorFlow. While TensorFlow does have its own APIs to reduce the amount of coding required, Keras extends these capabilities by adding a simplified interface on top of the TensorFlow library. Not only does it reduce the number of user actions required for most tasks, but you can also design and test artificial neural network models with just a few lines of code. It’s used both by novices who want to learn deep learning quickly and by teams working on advanced projects, including teams at NASA, CERN, and NIH.
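To illustrate the "few lines of code" point, here is a minimal sketch that defines, compiles, trains and queries a small classifier with Keras. The random features and labels are placeholders, so the model learns nothing meaningful; the layer sizes and epoch count are likewise assumptions.

```python
import numpy as np
from tensorflow import keras

# A small feed-forward network: 4 inputs, one hidden layer, 3 output classes.
model = keras.Sequential([
    keras.Input(shape=(4,)),
    keras.layers.Dense(16, activation="relu"),
    keras.layers.Dense(3, activation="softmax"),
])
model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)

# Placeholder data: random features with random integer class labels.
rng = np.random.default_rng(0)
features = rng.normal(size=(64, 4)).astype("float32")
labels = rng.integers(0, 3, size=64)

model.fit(features, labels, epochs=2, batch_size=16, verbose=0)
probabilities = model.predict(features[:5], verbose=0)  # one row of class probabilities per sample
```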

Ray: Open-source framework that offers a simple API for scaling applications from a single computer to large clusters. Ray includes a scalable reinforcement learning library called RLlib and a scalable hyperparameter tuning library called Tune.

Horovod: Another distributed deep learning training framework that can be used with PyTorch, TensorFlow, Keras and Apache MXNet. It’s similar to Ray in that it is designed primarily for scaling across multiple GPUs simultaneously. Initially developed by Uber, Horovod is open source and available through GitHub.

Machine learning modeling tools

Scikit-Learn: One of the most robust libraries for machine learning in Python. The library contains an assortment of tools for machine learning and statistical modeling, including classification, regression, clustering, dimensionality reduction and predictive data analysis. It’s open source and commercially usable under the BSD license, and it is built on NumPy, SciPy and matplotlib.
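As a short sketch of a typical scikit-learn classification workflow, the example below splits the bundled iris dataset, fits a logistic regression model and scores it on held-out data. The choice of estimator, split size and `random_state` are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Load a small structured dataset that ships with scikit-learn.
X, y = load_iris(return_X_y=True)

# Hold out a quarter of the rows to check how the model generalizes.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

clf = LogisticRegression(max_iter=1000)
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)  # fraction of correct test predictions
```

Most scikit-learn estimators share this `fit` / `predict` / `score` interface, so swapping in a different algorithm usually means changing only the line that constructs `clf`.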

XGBoost: Another open-source machine learning library that provides a regularizing gradient boosting framework for Python, C++, Java, R, Perl and Scala. Not only does it offer stable model performance across a variety of platforms, but it’s also currently one of the fastest gradient boosting frameworks available.

Apache Spark: An open-source unified analytics engine designed for large-scale data processing. It offers an uncomplicated interface for programming clusters with data parallelism. Its in-memory processing also makes it fast for many workloads.

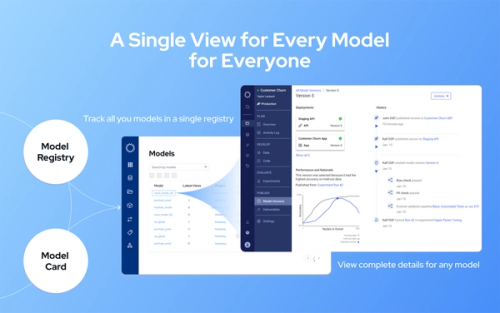

Modeling tools with Domino Data Lab

While these are some of the most popular tools used for AI/ML models today, this is by no means an exhaustive list. To explore the functionality of these tools within the same platform, take a look at Domino’s Enterprise MLOps by watching a quick demo here.

David Weedmark is a published author who has worked as a project manager, software developer and as a network security consultant.

Summary