Summarize product feedback and respond

Generating Customer Service Email Responses Using Advanced Text Generation Models through Amazon Bedrock

This template showcases how advanced text generation can enhance customer service applications using Amazon Bedrock. Through three dynamic Jupyter notebooks, we demonstrate innovative ways to tackle a real-world challenge: responding to customer support inquiries.

- Zero-shot Prompting with Amazon Titan (via Boto3): Leverage Amazon Titan Text using Boto3, the official Amazon Web Services (AWS) SDK for Python, to generate responses from a prompt without any prior examples or additional context. This approach shows how to use Amazon Titan (a large language model available through Amazon Bedrock) directly, relying only on the instructions provided in the prompt.

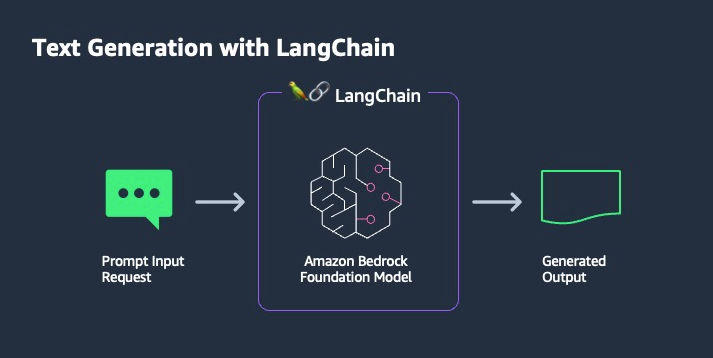

- Zero-shot Prompting with Anthropic’s Claude & LangChain: Explore the same use case as above, this time with Claude, a conversational large language model (LLM), using the LangChain framework. This integration highlights Bedrock's flexibility and Claude's ability to generate nuanced responses with minimal input. Anthropic’s Claude is a high-performance LLM optimized for dialogue, conversation, and content generation, and it can be accessed through Amazon Bedrock. LangChain provides a framework for integrating language models into your applications; its advantage lies in simplifying document loading, request formatting, embedding creation, and building workflows for question-answering tasks.

- Contextual Text Generation with LangChain: Lastly, take it a step further by using PromptTemplate to enrich the prompt with the contents of an actual email from a dissatisfied customer. Using Amazon Bedrock and LangChain, watch Anthropic’s Claude craft a more precise and empathetic response by drawing on the provided context.
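As a rough illustration of the zero-shot Boto3 approach listed above, the sketch below builds a Titan Text request and sends it through the Bedrock runtime client. The model ID, region, generation settings, and helper names (`build_titan_request`, `extract_titan_text`, `generate_reply`) are assumptions made for this example and are not taken from the notebooks; adjust them to the models enabled in your account.

```python
import json

# Assumed model ID; check which Titan Text models are enabled in your account.
TITAN_MODEL_ID = "amazon.titan-text-express-v1"


def build_titan_request(prompt, max_tokens=512, temperature=0.0, top_p=0.9):
    """Serialize a zero-shot prompt into a Titan Text request body (JSON string)."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": top_p,
            "stopSequences": [],
        },
    })


def extract_titan_text(response_body):
    """Return the generated text from a decoded Titan Text response dict."""
    return response_body["results"][0]["outputText"]


def generate_reply(prompt, region="us-east-1"):
    """Send the prompt to Bedrock. Requires boto3, AWS credentials, and model access."""
    import boto3  # imported lazily so the helpers above work without it

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=TITAN_MODEL_ID,
        body=build_titan_request(prompt),
        accept="application/json",
        contentType="application/json",
    )
    return extract_titan_text(json.loads(response["body"].read()))
```

With credentials configured, a call such as `generate_reply("Write an email from a customer service manager apologizing for poor service.")` would return the model's draft. The prompt carries instructions only, with no examples, which is what makes it zero-shot.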

This template covers a range of generation options, from quick zero-shot prompts to context-driven responses, demonstrating the power of LLMs through Amazon Bedrock. Whether leveraging Amazon Titan via Boto3 for straightforward efficiency or using Anthropic’s Claude with LangChain for more nuanced, conversational interactions, these tools unlock new possibilities for enhancing customer support. Enriching the input with contextual information, such as the actual customer email, gives the output more relevant details.
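To make the idea of enriching a prompt with a customer email concrete, here is a minimal sketch. The template wording, the Claude model ID, and the function names are hypothetical and chosen for illustration. The LangChain imports (`langchain_core`, `langchain_aws`) reflect one common package layout and may differ from the versions the notebooks use.

```python
# Hypothetical template text; the notebook's actual wording may differ.
EMAIL_REPLY_TEMPLATE = (
    "You are a customer service manager. Write an apologetic, helpful email "
    "reply to {customer_name} based on the feedback below.\n\n"
    "Customer email:\n{customer_email}\n\n"
    "Reply:"
)


def build_contextual_prompt(customer_name, customer_email):
    """Fill the template with the customer's details using plain str.format."""
    return EMAIL_REPLY_TEMPLATE.format(
        customer_name=customer_name,
        customer_email=customer_email.strip(),
    )


def generate_reply_with_langchain(customer_name, customer_email):
    """Same template via LangChain. Requires langchain-aws and Bedrock access."""
    from langchain_core.prompts import PromptTemplate
    from langchain_aws import ChatBedrock

    prompt = PromptTemplate.from_template(EMAIL_REPLY_TEMPLATE)
    # Assumed model ID; use whichever Claude model is enabled in your account.
    llm = ChatBedrock(model_id="anthropic.claude-3-haiku-20240307-v1:0")
    chain = prompt | llm  # pipe the formatted prompt into the model
    result = chain.invoke({
        "customer_name": customer_name,
        "customer_email": customer_email.strip(),
    })
    return result.content
```

`build_contextual_prompt` runs without any AWS dependency, so the final prompt can be inspected before anything is sent to a model. `PromptTemplate` fills the same `{placeholder}` fields and lets the template be composed with the LLM in a chain.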

Explore these advanced strategies today to redefine your customer service experience, drive efficiency, and boost customer satisfaction!