Model Registry

Centralized model tracking

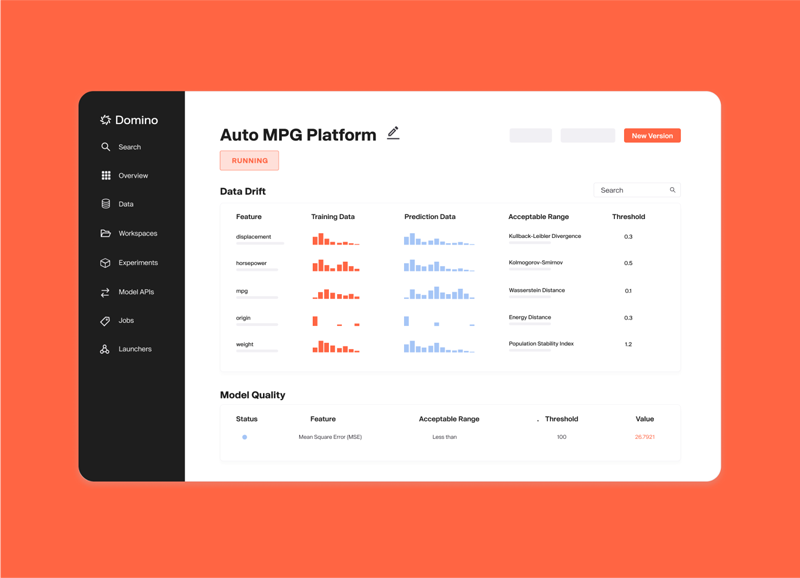

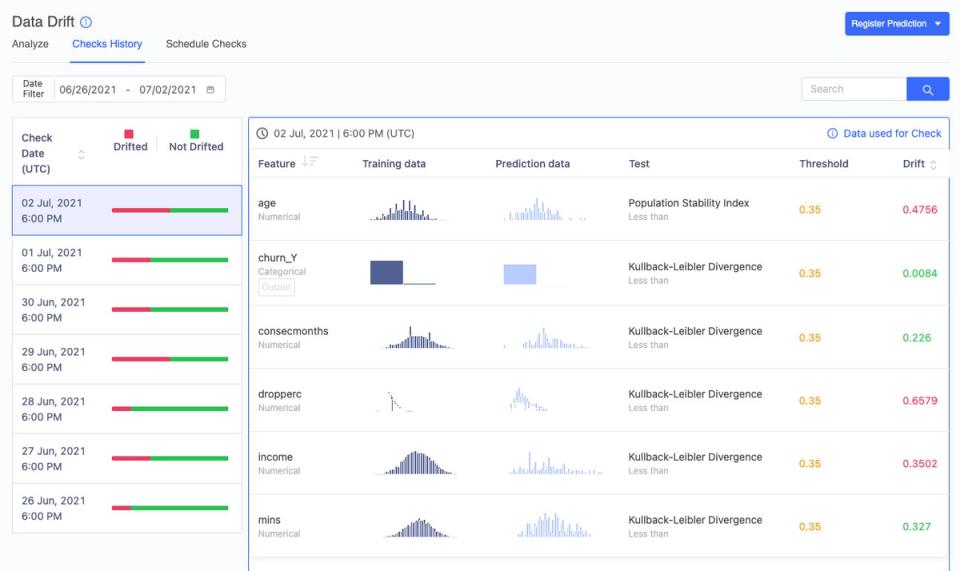

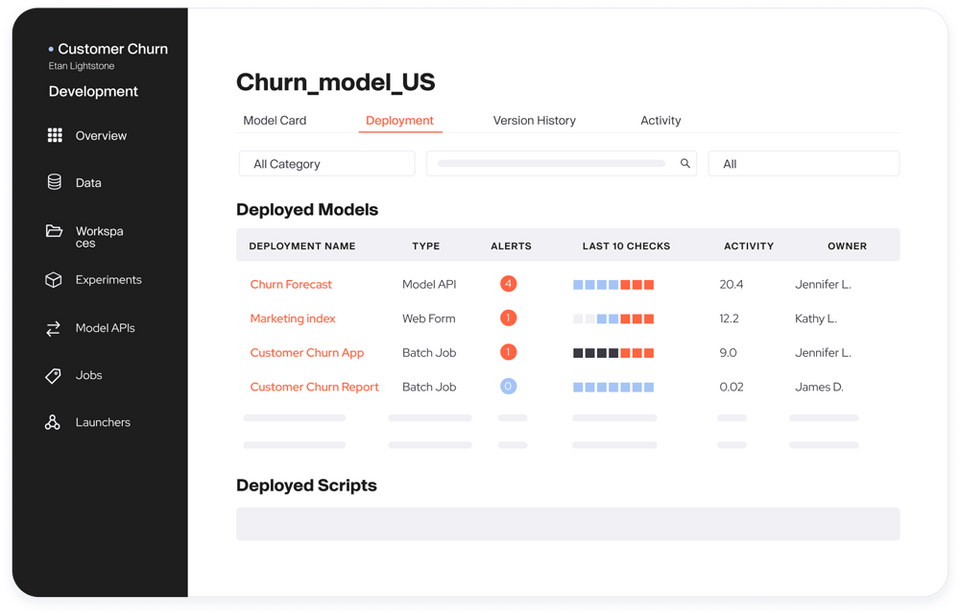

Track all your models, regardless of where they were trained. Domino Model Registry provides complete lineage tracking for auditability through integrated model cards. It serves as a central repository for all models, streamlines iterative improvement, and supports stakeholder reviews and approvals when promoting models from development to production.