Bridging MLOps and LLMOps: Ray Summit Talk

2023-10-04 | 2 min read

Domino's Ahmet Gyger recently presented at Anyscale's Ray Summit. Ahmet focused on the challenges enterprises face as they look to scale Generative AI applications based on Large Language Models (LLMs). While enterprises may be adept at managing the lifecycle of traditional AI models, LLMs bring constraints of their own. The development process is different, especially around model evaluation. Deployment is tricky even for experienced IT hands. And LLM monitoring is still in its formative stages.

Ahmet touches on what enterprise teams working with LLMs look for:

- Access to the latest and greatest models, especially with innovation happening at the current furious pace

- Ability to interact with models using prompts and retrieval-augmented generation (RAG), the latter with a choice among the many vector database solutions

- Orchestration and incorporation of generative models into workflows

- Model deployment and hosting as APIs and applications

- Governance and alignment across teams and throughout the generative AI lifecycle

- Deployment flexibility to any cloud based on data or infrastructure availability

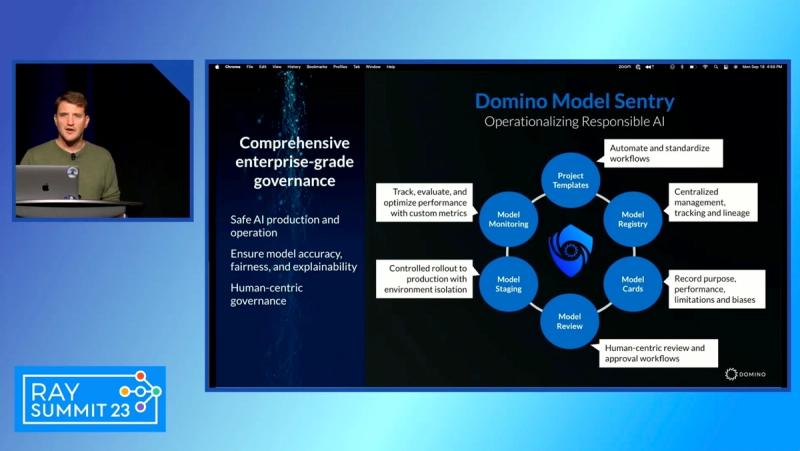

The LLM lifecycle remains complex. It touches on many systems, uses multiple technologies, and requires new skill sets. As a result, GenAI application releases demand strong alignment between teams. Enterprises must define and manage budgets. They have reputations to protect and risks to mitigate. They have to ensure their model releases follow responsible AI practices. That's where Domino's Model Sentry fits, providing a responsible AI model release framework. Ahmet walks through Model Sentry's benefits and explains how Domino addresses LLMOps needs in a corporate environment.

If you want to learn more, take 13 minutes of your day and watch the presentation yourself!