What AI leaders really think about responsible AI governance

Domino | 2024-09-19 | 5 min read

Organizations are increasingly recognizing the need to leverage AI responsibly — to accelerate AI innovation, minimize business risk, and comply with upcoming regulations — but are facing a widening AI governance gap. According to Domino Data Lab's latest REVelate survey, 97% of organizations have set goals for responsible AI. Yet, despite this ambition, nearly half (48%) of AI leaders acknowledge that their organizations lack the resources needed to fully implement AI governance frameworks.

Responsible AI: A strategic imperative

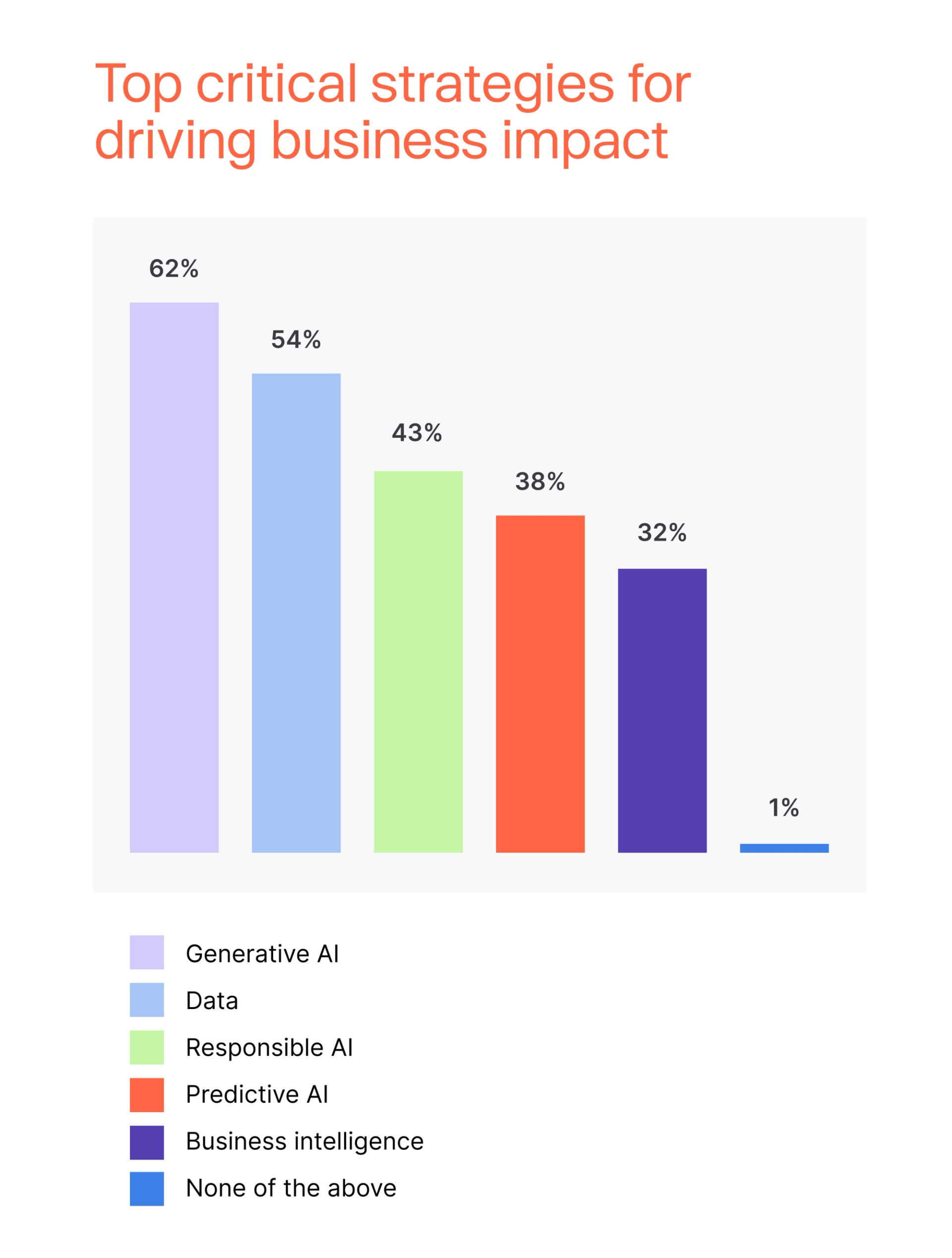

The recent REVelate survey reveals that responsible AI is emerging as a top strategic priority for organizations aiming to unlock the full potential of their models. More than four in ten respondents (43%) rate responsible AI as “extremely critical” to driving business impact. This is especially notable when compared to traditional enterprise priorities like business intelligence, which only 32% of leaders view as crucial to growth. Further emphasizing this trend, a recent study by BARC found that 95% of organizations are planning to update or replace their AI governance frameworks to meet evolving expectations and ensure AI's responsible use.

The high stakes of ignoring AI governance

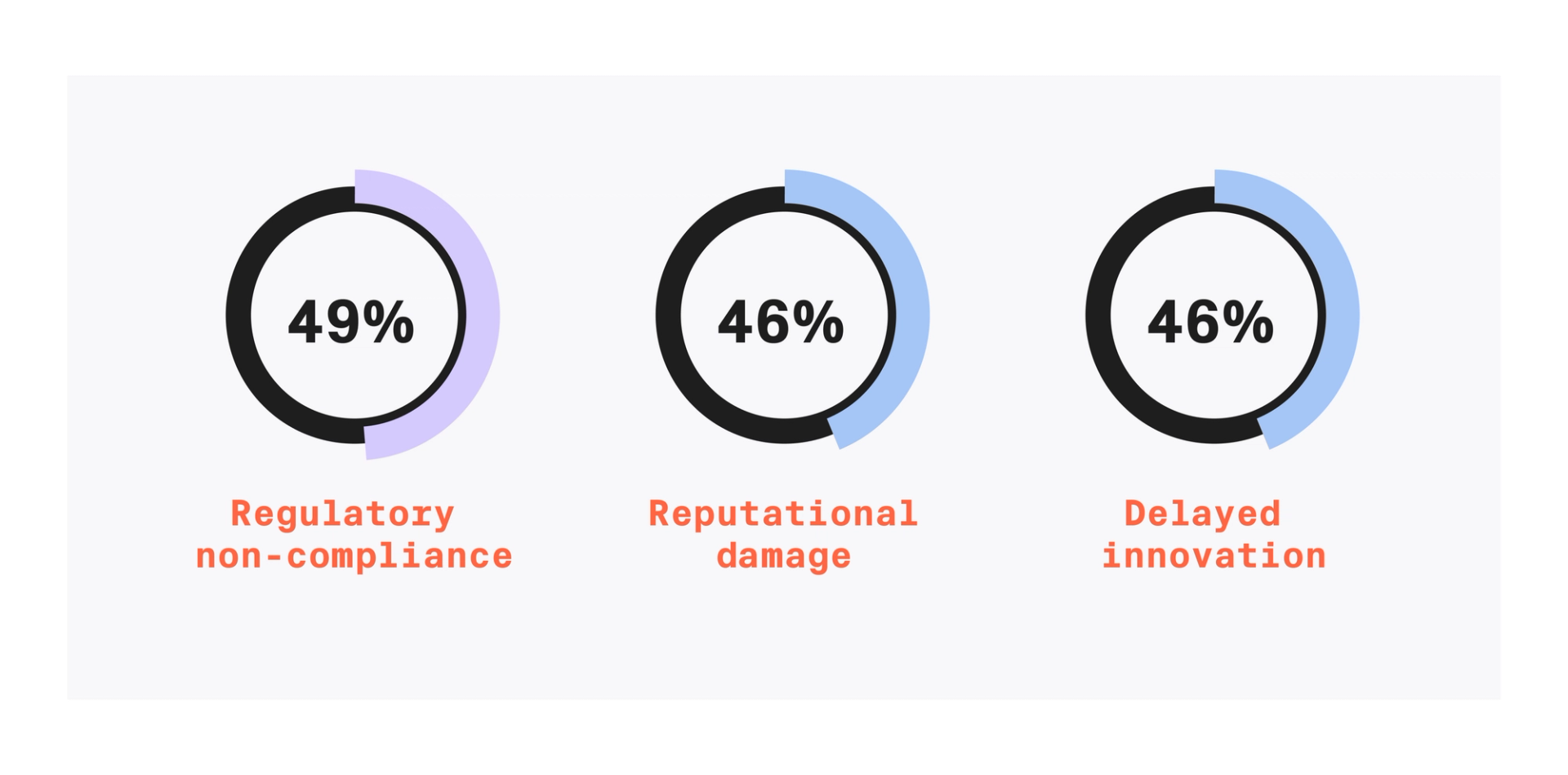

The risks of neglecting AI governance are stark. Regulatory violations topped the list of concerns, with 49% of respondents naming them as the biggest risk. Fines for non-compliance under laws such as the EU AI Act can reach 7% of global annual turnover for the most serious violations, making the cost of failure substantial. Reputational damage and delayed innovation, each cited by 46% of respondents, are additional concerns. Companies that fail to govern AI risk losing customer trust and falling behind in the race to innovate.
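To put the 7% figure in perspective, here is a minimal sketch of the arithmetic. It assumes the EU AI Act's top penalty tier, where the ceiling is the higher of a fixed EUR 35 million or 7% of worldwide annual turnover. The function name and the example turnover figures are hypothetical, and the sketch ignores the different treatment of smaller companies and the lower tiers that apply to less serious violations.

```python
# Illustrative only: the 7% rate is the figure quoted in the article.
# The EUR 35 million fixed amount is an assumption about the Act's top tier.
PERCENT_CAP = 7                # percent of worldwide annual turnover
FIXED_CAP_EUR = 35_000_000     # fixed amount for the top tier


def max_fine_eur(global_annual_turnover_eur: float) -> float:
    """Return the ceiling on a top-tier fine: the higher of the two caps."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    percent_based = global_annual_turnover_eur * PERCENT_CAP / 100
    return max(FIXED_CAP_EUR, percent_based)


# Hypothetical companies with EUR 100 million and EUR 2 billion in turnover.
for turnover in (100_000_000, 2_000_000_000):
    print(f"turnover {turnover:,} EUR -> ceiling {max_fine_eur(turnover):,.0f} EUR")
```

Under these assumptions, the fixed amount sets the ceiling for the smaller company, while the percentage takes over once turnover exceeds EUR 500 million.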

The survey also underscores the financial burden of poorly governed AI: 34% of respondents are concerned about increased operational costs. The absence of robust governance can drain resources that would be better spent on innovation and growth.

AI governance: A balancing act for innovators

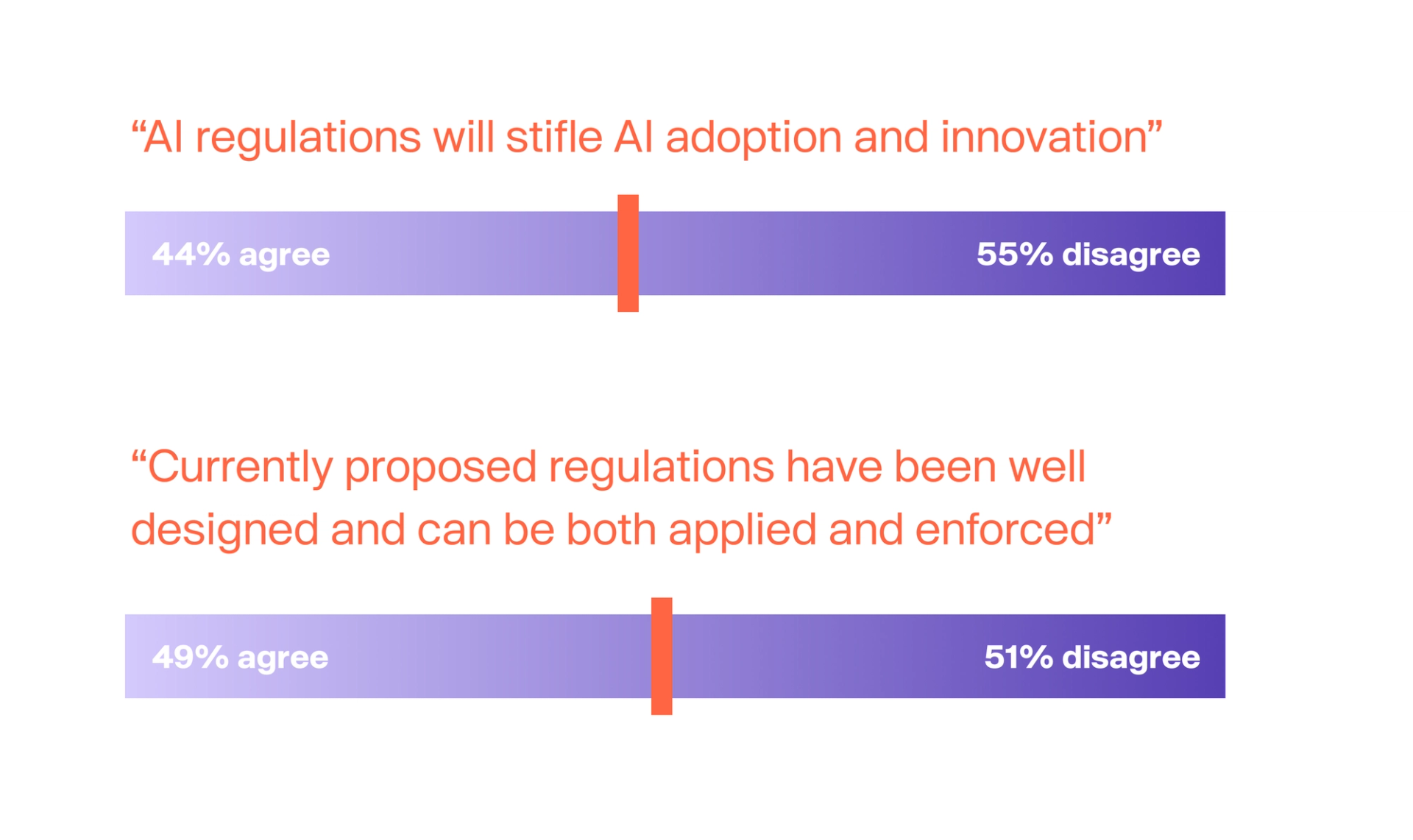

AI leaders are cautiously optimistic about the future of AI regulations. While 71% believe regulations will ensure AI is used safely, there are concerns about the balance between fostering innovation and regulatory overreach. Nearly half (44%) of respondents worry that stringent regulations could slow down AI adoption, while 56% see regulation as essential to safeguarding AI’s integrity and ensuring long-term success.

The survey reveals a split in opinions about the effectiveness of current regulations. Just over half (51%) of respondents doubt that regulatory frameworks are enforceable in their current form, highlighting the ongoing work required to define and implement AI governance practices.

Key approaches to implementing AI governance

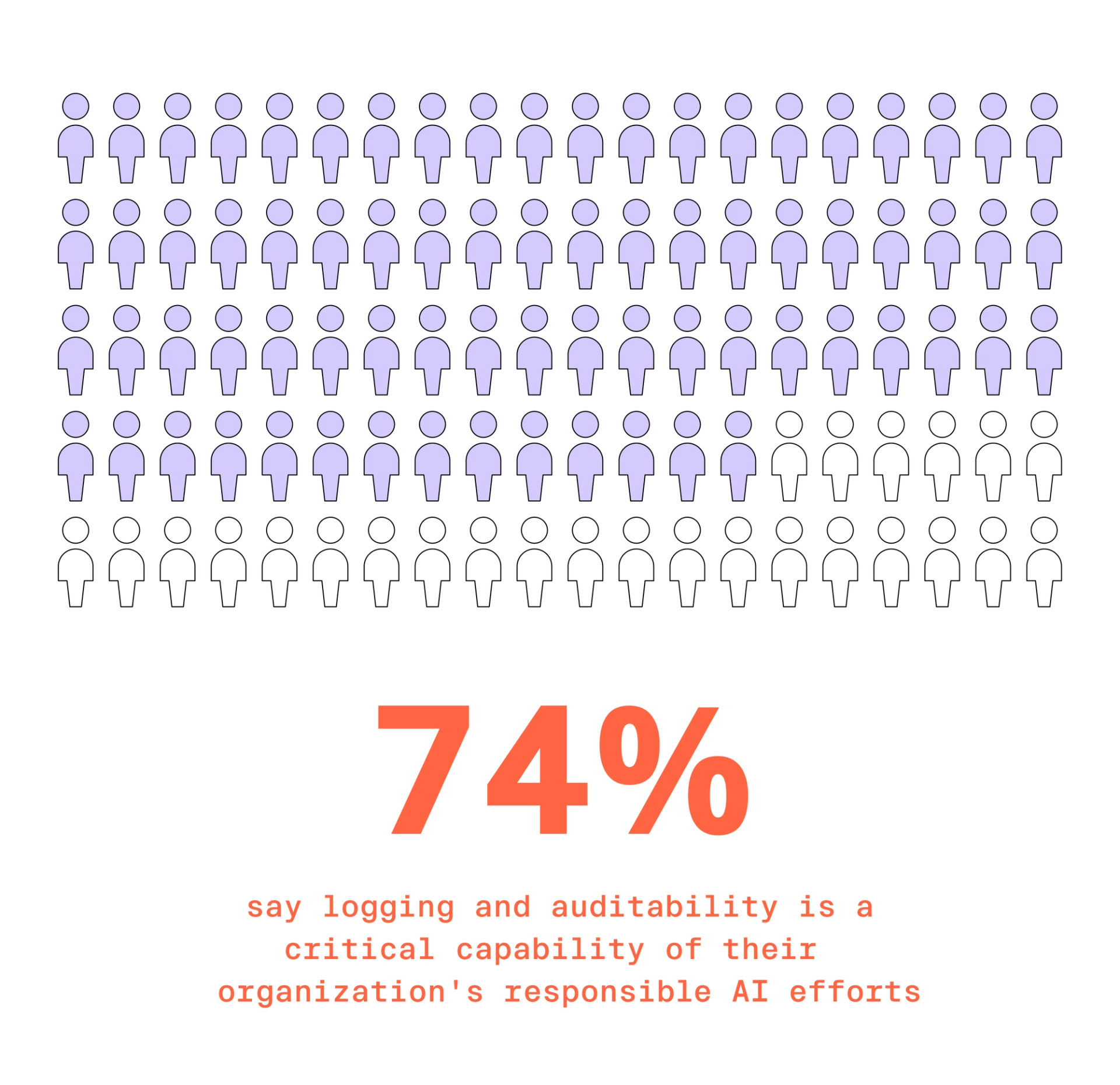

To meet these challenges, enterprises are prioritizing governance frameworks that translate responsible AI principles into practice. The survey found that 47% of organizations are focusing on defining responsible AI principles, while 44% are deploying governance platforms that ensure those policies are applied consistently across the AI lifecycle. Auditing (74%), reproducibility (68%), and monitoring (61%) emerge as the most critical platform capabilities needed to support responsible AI.

AI ethics boards and training programs, while often discussed as governance solutions, are taking a back seat to more actionable governance frameworks: only 29% of respondents prioritize ethics boards, and 17% focus on training.

Overcoming the challenges of AI governance

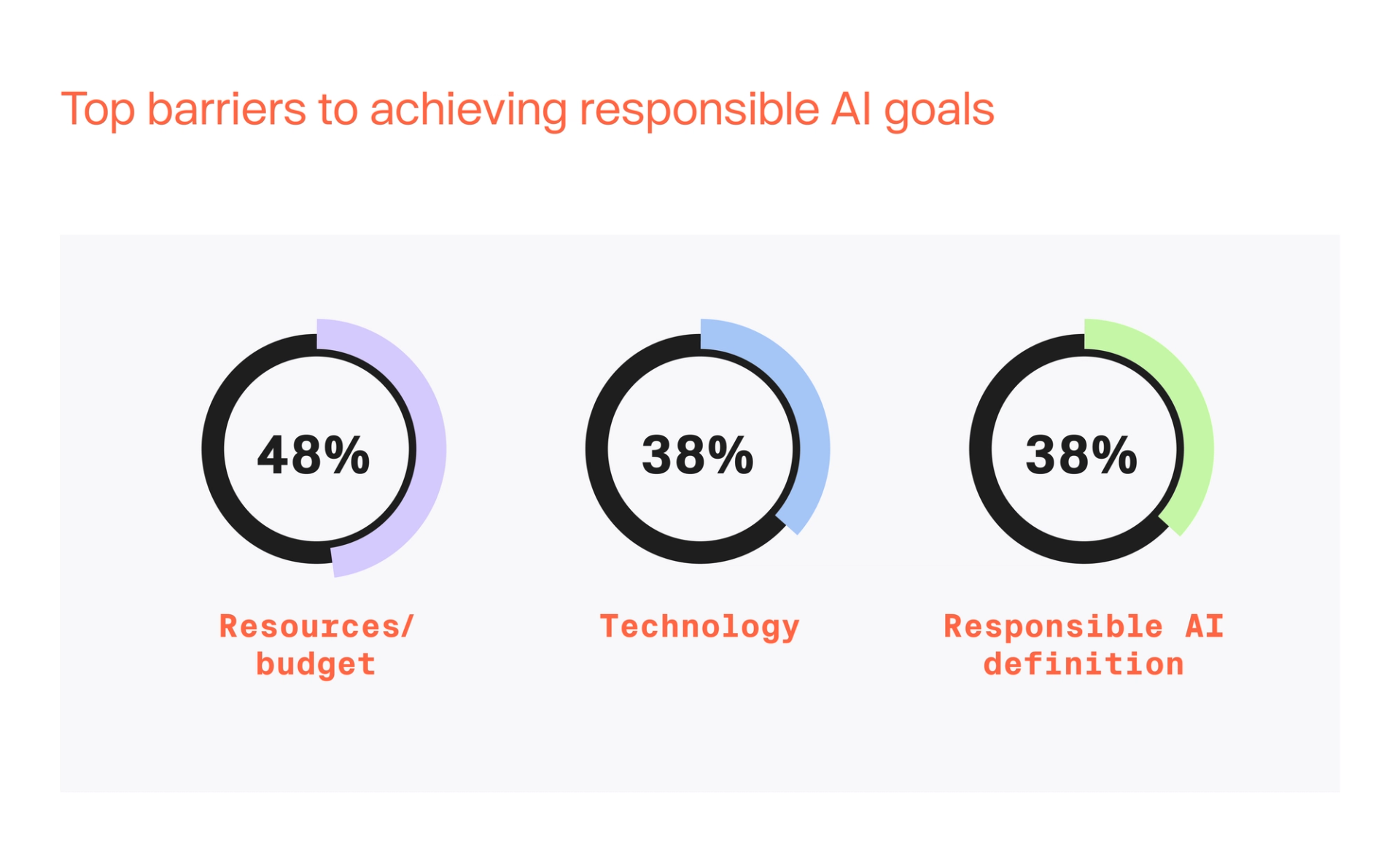

Despite the growing importance of AI governance, organizations face significant obstacles. Nearly half (48%) of survey respondents cite resource constraints as the primary barrier to implementing governance frameworks. Additionally, 38% report challenges with integrating the right technologies, such as tools for auditing and monitoring, into their AI processes.

The difficulty of defining responsible AI across complex organizations further complicates governance efforts. Thirty-eight percent of respondents struggle with inconsistent definitions of responsible AI, which makes it hard to implement policies uniformly across departments.

Conclusion

Domino Data Lab’s 2024 REVelate survey highlights the growing recognition of responsible AI as a critical driver of business success. However, the path to achieving it is fraught with challenges, including regulatory compliance, resource constraints, and the need for advanced governance technologies. As AI continues to revolutionize industries, organizations that master governance will be well-positioned to lead the way in innovation while mitigating risks and ensuring compliance.

For more insights on AI governance and the latest best practices, download the full REVelate survey results.

Domino Data Lab empowers the largest AI-driven enterprises to build and operate AI at scale. Domino’s Enterprise AI Platform provides an integrated experience encompassing model development, MLOps, collaboration, and governance. With Domino, global enterprises can develop better medicines, grow more productive crops, develop more competitive products, and more. Founded in 2013, Domino is backed by Sequoia Capital, Coatue Management, NVIDIA, Snowflake, and other leading investors.