Deep Learning & Machine Learning Applications in Financial Services

Gourav Singh Bais | 2022-08-10 | 39 min read

In the last few years, machine learning (ML) and deep learning (DL) have become a core part of fields like healthcare, retail, banking, and insurance. This list can go on indefinitely—there's almost no field where machine learning is not applied to improve the overall efficiency and accuracy of systems. As the world's economy has rapidly grown, so has the need to do things automatically.

When we talk about the economy, banking and insurance, sometimes referred to as financial services, are two of the primary industries that come to mind. According to a report from Accenture, AI solutions may add up to one billion USD in value to financial services in upcoming years. Several other reports also discuss how integrating AI with financial services can increase the potential benefits.

The whole financial domain, including both banking and insurance, is increasingly shifting to the online platform. Things like new user registration, identity verification, transactions, and purchasing financial services and insurance policies can all be done online. With this shift has come a need for process automation, security, and expedited, optimized handling of customer queries. Machine learning and deep learning embedded in decisioning systems are one-stop solutions for these needs. They help reduce manual tasks and add value to the existing processes, improving the whole finance system. Things like predicting credit scores, evaluating customer profiles for different types of loans, handling customer queries using chatbots, and detecting fraud in banking and insurance are only some of the major use cases that organizations can achieve with the help of ML.

In this article, you'll learn more about how machine learning and deep learning can improve financial services. You will also be introduced to different financial use cases, and machine-learning algorithms that are applied in those sectors.

Why Machine Learning and Deep Learning for Financial Services?

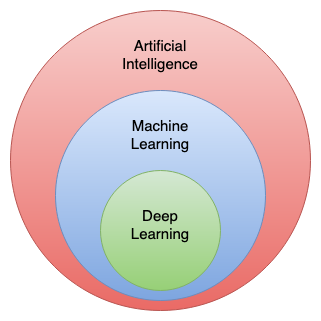

Machine learning is the branch of computer science that uses mathematics and statistics to analyze data and make predictions. Deep learning is a subfield of machine learning that uses neural networks, in particular, to perform more complex tasks involving unstructured data. Machine learning is used extensively for tasks like regression, classification, and clustering that work with tabular data, or data arranged in rows and columns. This is often called a traditional AI approach. Deep learning is preferred for more complex tasks such as processing images, voice, and text. To train different neural network models, deep learning requires a huge amount of data and a powerful system in terms of memory and processing. To learn more about the algorithms and models that are part of machine learning and deep learning, look at Introduction to Deep Learning and Neural Networks.

Now that you have been introduced to ML and DL, let's discuss some of the major benefits of using them.

- Pattern identification: Machine learning methods use mathematical and statistical methods to identify patterns in data. Manually finding patterns in data is incredibly time-consuming, and is fraught with human error—if the patterns can be found at all. Algorithms such as classification or clustering can easily find patterns to separate different classes. One of the major benefits of these approaches is that they're able to classify data that they haven't previously seen. For example, if you're working on fraud detection and have an instance that your model hasn't been trained on and so isn't familiar with, the algorithm is still able to identify this instance as an outlier that may be fraudulent.

- Optimization: With the fast-moving, globalized economy and rapid growth of online platforms, there's an ever-increasing need to handle all aspects of the financial industry more quickly and accurately. To replace the manual approach, two main solutions are used by organizations. One is a traditional rule-based approach, and the second is an ML-based approach. In the rule-based approach, the creation of rules is handled manually, which is difficult and time-consuming, and struggles to account for edge cases. ML offers a different approach, with computers assisting humans in reaching a decision. A human still needs to prepare the data, which will then be input into the ML model training process. The end result is a model which outputs the rules used to make decisions. Because these rules aren't manually crafted, there are substantial savings of time and labor, as well as a greatly reduced chance of error.

- Continuous improvement: For many real-world use cases, such as fraud detection or object detection, data distribution changes over time. This can be in the form of data or concept drift. If your solution isn't able to handle those, you'll need to manually intervene to incorporate the changes. If, on the other hand, you're using ML- or DL-based solutions, manual intervention is likely unnecessary. With proper model monitoring systems in place, the system can retrain the model to account for the drift, allowing it to maintain accuracy.
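One way a monitoring system might notice data drift is the population stability index (PSI), which compares how a feature was distributed at training time with how it is distributed in production. The sketch below is only an illustration: the function name `population_stability_index`, the bin count, the toy "transaction amount" values, and the 0.2 alert level (a commonly quoted rule of thumb) are all assumptions, not something prescribed by this article.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a recent sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Toy data for a hypothetical "transaction amount" feature.
training_amounts = [float(x % 100) for x in range(1000)]
same_distribution = [float((x * 7) % 100) for x in range(1000)]
shifted_amounts = [float(x % 100) + 40.0 for x in range(1000)]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level
needs_retraining = (
    population_stability_index(training_amounts, shifted_amounts) > DRIFT_THRESHOLD
)
```

In a setup like this, a PSI above the chosen threshold would be the signal that triggers retraining or a manual review.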

Finance Use Cases with ML and DL Solutions

Now that you are aware of what machine learning and deep learning are, let's look at some of their major use cases in finance.

Loan Risk Evaluation

Loan risk, sometimes referred to as credit risk, is the likelihood that a client will fail to repay loans or credit card debts, or to otherwise meet contractual obligations. This is a costly issue for banks, venture capital firms, asset management companies, and insurance firms globally. Identifying the risk profile of a customer manually is a complex and time-consuming task, and although automation in the form of online information verification has been introduced to the process, it is still difficult to identify the risk profile of a customer. This is the reason these firms are now turning to ML and data science solutions to solve this issue for them. ML and DL solutions help organizations identify credit risk of potential customers, allowing them to be segmented into interest rate bands.

ML-Based Solutions for Loan Risk Evaluation

Loan risk evaluation is a binary classification problem as you would have only two outputs, classes "0 (No Risk)" and "1 (Risk)". Since it's a classification problem, there are many models that are well suited to this task. Organizations working on this issue have found that ensemble learning models such as random forest classifiers and the XGBoost model have the best performance.

In loan risk evaluation models, most of the use cases that you work on will have inherently imbalanced data—there will always be more no-risk samples than there will be risky ones. This means that model accuracy can be difficult to assess. For example, suppose you test your model on a hundred rows of real-world data, and that data set has ninety samples that are no risk, and ten that are classed as risky. If the model reports them all as no risk, the model's accuracy is ninety percent, since all of the negative samples were flagged correctly, even though none of the positive ones were. But this model would be useless in action, because it doesn't successfully identify the risky loans. Development strategies and metrics used to assess your algorithms need to be carefully considered in order to account for any natural bias in the data.
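The arithmetic in this example can be checked in a few lines. The sketch below rebuilds the ninety/ten test set, scores a "model" that labels everything as no risk, and compares accuracy with two metrics that are less easily fooled by imbalance. The helper names are illustrative; libraries such as scikit-learn ship equivalents.

```python
# Toy labels matching the example: 90 no-risk (0) and 10 risky (1) loans.
y_true = [0] * 90 + [1] * 10
# A degenerate "model" that labels every loan as no risk.
y_pred = [0] * 100

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall(y_true, y_pred, positive=1):
    """Share of truly positive samples that the model flagged as positive."""
    true_pos = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    actual_pos = sum(t == positive for t in y_true)
    return true_pos / actual_pos if actual_pos else 0.0

def balanced_accuracy(y_true, y_pred):
    """Mean of the recall on each class; 0.5 is no better than guessing."""
    return (recall(y_true, y_pred, 1) + recall(y_true, y_pred, 0)) / 2

print(accuracy(y_true, y_pred))           # 0.9
print(recall(y_true, y_pred))             # 0.0
print(balanced_accuracy(y_true, y_pred))  # 0.5
```

Accuracy looks healthy at ninety percent, while recall on the risky class is zero, which is the number that matters for this use case.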

Customer Analytics

Customer analytics is the process of analyzing customer behavior and preferences. Data about customers contains information like their purchase patterns, geographical information, and transaction types. This helps businesses derive information such as their common customer demographics and customers' buying behavior, which informs their business decisions. Both ML- and DL-based solutions are used for customer analytics. Some of the major examples of customer analytics are as follows:

- Customer segmentation: Customers can be grouped into segments based on information such as demographics or behavior. By identifying these segments, organizations are better able to understand what their customers are looking for, as well as what segments of the market they're not reaching. Clustering algorithms, especially k-means clustering, are used to create behavior-based customer segments.

- Churn analysis: This is the process of identifying the rate at which customers discontinue their use of your service, and may also include why they're discontinuing. This is a binary classification problem, for which ML models like logistic regression, decision trees, and ensemble learning models are used.

- Sentiment analysis: Sentiment analysis is the process of identifying the customer's satisfaction with a product or service. Sentiment analysis uses text analytics techniques, which are techniques that make it possible to analyze and extract useful information from text data. Both machine learning algorithms such as SVM and naive Bayes and DL algorithms such as RNNs (Recurrent Neural Networks) are used for this.

- Recommendation system: A recommendation system is a module that is used to recommend products or services to customers that they are likely to want. Recommendation systems use approaches such as collaborative filtering and content-based recommendation.
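To make the customer segmentation item above more concrete, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. The two features, the six toy customers, and the choice of starting centroids are assumptions made for illustration. A real project would normally scale the features first and use a library implementation.

```python
import math

def kmeans(points, centroids, iterations=20):
    """Plain Lloyd's algorithm; `centroids` are the starting guesses."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = [
            tuple(sum(dim) / len(cluster) for dim in zip(*cluster)) if cluster else c
            for cluster, c in zip(clusters, centroids)
        ]
        if new_centroids == centroids:
            break  # assignments can no longer change
        centroids = new_centroids
    return centroids, clusters

# Toy customers: (transactions per month, average balance in $1,000s).
customers = [(2, 1.0), (3, 1.5), (2, 1.2), (20, 9.0), (22, 10.0), (21, 9.5)]
final_centroids, segments = kmeans(customers, centroids=[customers[0], customers[3]])
```

With this toy data the algorithm separates the low-activity customers from the high-activity ones, and each segment can then be profiled separately.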

Pricing and Offers

One crucial consideration when an organization launches a product or service is the price point at which it should be offered. Many organizations have dedicated teams that work on creating different offers for different customer segments, but to improve the results and provide a faster way forward, machine learning is increasingly used to create these offers.

This can be achieved using clustering algorithms that help cluster similar products. For example, if an insurance company is launching a new product, a clustering algorithm can be applied to all the products the company offers, creating groups of similar products, one of which will contain the new product. The company can then use the price points of products in that group to inform the pricing of the new product. Clustering algorithms can also be used in a similar way to create offers for specific customer segments.
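The pricing idea can be sketched as follows, assuming the clustering step has already grouped the existing products. The product names, features, prices, and group labels are all hypothetical; the point is only to show how a new product could inherit a reference price range from its nearest group.

```python
import math
from statistics import median

# Hypothetical catalog: (coverage in $1,000s, deductible in $1,000s) -> annual price.
products = {
    "basic_auto":   ((50, 2.0), 400),
    "plus_auto":    ((60, 1.5), 480),
    "basic_home":   ((300, 5.0), 1200),
    "premium_home": ((350, 4.0), 1500),
}
# Group labels as a clustering algorithm might have assigned them (assumed here).
groups = {"auto": ["basic_auto", "plus_auto"], "home": ["basic_home", "premium_home"]}

def centroid(names):
    feats = [products[n][0] for n in names]
    return tuple(sum(dim) / len(feats) for dim in zip(*feats))

def reference_prices(new_features):
    """Return the nearest group and the min, median, and max price inside it."""
    nearest = min(groups, key=lambda g: math.dist(new_features, centroid(groups[g])))
    prices = [products[n][1] for n in groups[nearest]]
    return nearest, min(prices), median(prices), max(prices)

print(reference_prices((320, 4.5)))  # ('home', 1200, 1350.0, 1500)
```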

Claims Adjudication

Claims adjustment is the process of settling—including paying or declining to pay—a claim raised by a policyholder. For example, if someone has a vehicle insurance policy and they have an accident that results in damage to their vehicle, they'll make a claim to their insurance. A representative of the insurance company assesses the claim and any supporting documentation, such as police reports, photographs, and information from their claims adjuster.

If the insurance company finds the claim is genuine, they adjust the claim by paying the appropriate amount, otherwise they reject it. All insurance claims, regardless of the type of insurance, follow a similar process, which is historically done manually. Often it takes a significant amount of time, and has a high financial cost due to the need to visit the site of the claim.

In recent years, insurance companies have turned to a different solution for claims adjustment: computer vision, which is a branch of deep learning. Many major insurance companies now use drones to take images of the home for which the claim is filed. Then, multiple deep learning-based object detection and classification models are run on the images to identify the type and severity of the damage, which is then used to process the claim.

One such company is Topdanmark, a large European insurance company. After moving to the Domino data science platform, Topdanmark was able to use image and natural language processing techniques to reduce the approval times from four days to mere seconds. The models are eight hundred times faster than humans, and they’re now responsible for sixty-five percent of all claims.

Flagging Fraud

Financial fraud has always been one of the major issues for finance-related organizations. According to the FBI's 2021 Internet Crime Report, a record-breaking 847,367 complaints of internet fraud were filed in the United States alone, leading to a loss of $6.9 billion. These fraud claims were related to a range of subjects, including credit cards, loan repayment, false claims, identity theft, and document forgery. However, internet fraud cases are just one aspect of financial fraud. Unfortunately, both online and offline fraud are incredibly widespread in finance and insurance. Some common types of financial fraud are:

- false insurance claims,

- identity thefts,

- embezzlements,

- pyramid schemes,

- dishonest accounting,

- as well as numerous other increasingly creative ways to commit financial fraud.

The traditional way of handling fraud is to use a rule-based approach. In this approach, a team of data engineers manually creates rules that can segment fraudulent and non-fraudulent transactions. However, manually creating rules that cover all fraud scenarios is impossible, and as your data grows, maintaining a comprehensive ruleset becomes resource-intensive and complex.

More modern approaches to fraud detection are handled using both classification and clustering algorithms. Companies want to be able to identify fraudulent use in real time, enabling them to prevent the fraud before it occurs.

One of the most common types of fraud is the one associated with credit cards. Given the huge number of credit card transactions, detecting credit card fraud is a serious challenge. Machine learning is probably the optimal way to solve such a challenge, and in this case study, you’ll see what it takes to build a machine learning model for credit card fraud detection. The steps are similar to building any kind of machine learning model: you start with reading the data set, then you do exploratory data analysis, followed by data cleaning and feature engineering. One specific problem you need to handle is dealing with the imbalance in the data set caused by the fact that the large majority of credit card transactions are genuine, while only a small percentage are actually fraudulent.

After you handle the imbalance in the data set, you can proceed with training and evaluating the model. Once you’re satisfied with the results, it’s time to deploy the model and use it to detect real-life credit card fraud. Contrary to what you may think, the process doesn’t end here. The deployed machine learning model needs to be continually monitored and regularly retrained, generally with the help of an MLOps platform.
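One simple way to handle the imbalance is random oversampling, where fraudulent rows are duplicated until the two classes are the same size. The article does not say which technique to use, so treat this as one option among several (class weights and undersampling are common alternatives). The function name and toy data below are assumptions.

```python
import random

def random_oversample(rows, labels, minority=1, seed=0):
    """Duplicate randomly chosen minority rows until both classes are the same size."""
    rng = random.Random(seed)  # fixed seed so the resampling is repeatable
    minority_rows = [r for r, y in zip(rows, labels) if y == minority]
    majority_count = sum(1 for y in labels if y != minority)
    extra = [rng.choice(minority_rows)
             for _ in range(majority_count - len(minority_rows))]
    return rows + extra, labels + [minority] * len(extra)

# Toy training set: 95 genuine transactions (0) and 5 fraudulent ones (1).
rows = [[i] for i in range(100)]
labels = [0] * 95 + [1] * 5
balanced_rows, balanced_labels = random_oversample(rows, labels)
```

Resampling like this should be applied to the training split only, so that evaluation still reflects the real class balance.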

Economic Modeling

The economy we live in is incredibly complex, with countless intertwined economic agents in it. And in order to affect or move the economy in the way we desire, we first need to understand it, despite its complexity. This is where models come in. An economic model is nothing but a simplification of the real-world economy. Usually, there’s an outcome variable that the economic model is trying to explain, as well as independent variables that affect the outcome variable in some way that we want to understand. Technically, a model is a mathematical representation of different variables that shows the logical and quantitative relationship among them. This could be related to any business, though it is most frequently used in finance and is used to derive business insights from the data.

For example, if you want to forecast the GDP growth rate, you can build a model where the outcome variable is the GDP growth rate itself, and the independent variables are all the factors that affect it, such as the consumer confidence, inflation rate, exchange rates, and numerous other factors. Of course, the model can’t forecast the GDP growth rate perfectly, but it can still be incredibly useful. That’s why organizations such as the World Bank, IMF, and European Commission regularly create such models.
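As a rough illustration of an outcome variable explained by an independent variable, the sketch below fits a one-variable ordinary least squares line. The numbers are made up and deliberately exactly linear (they are not real economic data), and a real GDP model would use many variables and a statistics library rather than this hand-written helper.

```python
def fit_line(x, y):
    """Ordinary least squares for one predictor: y ~ intercept + slope * x."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    covariance = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    variance = sum((xi - mean_x) ** 2 for xi in x)
    slope = covariance / variance
    return mean_y - slope * mean_x, slope

# Made-up illustration: a consumer confidence index vs. GDP growth in percent.
consumer_confidence = [90, 95, 100, 105, 110]
gdp_growth = [1.0, 1.5, 2.0, 2.5, 3.0]

intercept, slope = fit_line(consumer_confidence, gdp_growth)
forecast = intercept + slope * 102  # predicted growth at a confidence index of 102
```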

There are no standard machine-learning algorithms for economic modeling, as the models required vary greatly depending on the organization and the type of data they have. Some nearly universal steps, though, are data preprocessing and feature engineering, which can reveal a great deal about the data. The processed data can then be used by any machine-learning algorithm to perform whatever task the organization requires.

Financial modeling is closely related, with financial models being a simplification of real-world scenarios in finance. Financial models are indispensable for any serious financial institution, and they’re at the core of the whole financial services industry. This is why improving the model development process is of utmost importance for a financial institution. One of the ways to do that is by using a platform such as the Domino Data Science platform. By using this platform, Moody’s Analytics, a leader in financial modeling, increased the efficiency of model development, reduced the model development to deployment cycle from nine to four months, and managed to move models into production six times faster.

Trading

In finance, trading is using money to buy or sell shares of a company. On the stock market, different shares are bought and sold every day, and machine learning makes its mark here as well. Due to the nature of the global economy, which is heavily influenced by unpredictable political and natural events, no model can predict every fluctuation of the market. They can, however, give you indications about market movements.

Trading is generally a time-dependent process, and is classified as time series analysis. There are different ML-based solutions, such as support vector machine-based prediction models and random forest classifier (RFC)-based prediction models, that are commonly used in market analysis. These models sometimes underperform in real-world situations with sudden market changes, as they make time-dependent predictions based entirely on past data.

A more effective solution is one that can handle an enormous amount of data, but can also process news and other events that can affect the market. For this reason, Recurrent Neural Network (RNN) models are generally preferred, as they can both handle text data and model time-dependent features.

Just like in many financial applications, machine learning is being increasingly used in developing trading strategies. Combined with algorithmic trading, machine learning can create some really powerful trading models.

Algorithmic trading refers to coding a program which will follow certain trading instructions about when to buy or sell securities on the market. It has been used for decades, historically with a manually coded set of instructions. However, machine learning is increasingly used to get to that set of instructions, which will include instructions about whether to buy or sell, the price points at which an asset is overpriced or underpriced, how many securities to buy or sell in a certain situation, and other important trading instructions.
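A manually coded instruction set of the kind described above can be as small as a moving-average rule: signal a buy when the short-term average price is above the long-term average, and a sell when it is below. The window sizes and function names here are arbitrary assumptions chosen for illustration.

```python
def moving_average(prices, window):
    """Average of the most recent `window` prices."""
    return sum(prices[-window:]) / window

def trend_signal(prices, short=3, long=5):
    """Return 'buy', 'sell', or 'hold' by comparing two moving averages."""
    if len(prices) < long:
        return "hold"  # not enough history yet
    short_ma = moving_average(prices, short)
    long_ma = moving_average(prices, long)
    if short_ma > long_ma:
        return "buy"
    if short_ma < long_ma:
        return "sell"
    return "hold"

print(trend_signal([10, 11, 12, 13, 14]))  # buy
print(trend_signal([14, 13, 12, 11, 10]))  # sell
print(trend_signal([10, 11]))              # hold
```

An ML-driven strategy would replace the fixed windows and thresholds with values, or whole decision functions, learned from data.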

In the process of developing and deploying trading strategies, an MLOps platform such as Domino’s is virtually a must. For example, Coatue Management, a global investment manager, uses Domino to enhance their investment research process. Domino’s platform has enabled Coatue to increase their productivity, achieve significant operational savings, accelerate their research cycle, and deploy models in production.

New Product Creation

This is a use case that many industries are focusing on right now. Using the information about the previous products, organizations are using machine learning to come up with new products. One great example of this can be the creation of new insurance policies for the customers based on their geographical information.

Machine learning can be used to improve many stages of the product development process. Using machine learning models, companies can identify necessities for new products. Even simply analyzing customer reviews using natural language processing can give companies ideas for new products, improvements to existing products, or ways to improve their business. In addition to identifying new product ideas, machine learning can also help with the design of a concept for new products.

Operational Efficiencies

Machine learning helps companies optimize many tasks, allowing them to improve the customer and employee experience, and also to improve the efficiency of their operations. To use the banking industry as an example, it's only in recent years that tasks like opening accounts, seeing transaction details, and applying for loans could be done nearly instantly online, rather than by speaking directly to someone and waiting for a response. With advanced technology like ML and DL, all these things are carried out online very efficiently.

There is no predefined ML model that works for improving the operational efficiency throughout different industries, but almost all algorithms ultimately improve efficiency. For example, in the finance sector, an online user registration and verification process has been adopted. In these processes, the user submits a selfie and their ID card, then algorithms such as optical character recognition, voice recognition, and face match are used to validate the user information. Usually, neural networks like CNNs and RNNs are the heart of this user verification process, while other supervised and unsupervised algorithms are used to help organizations improve their existing solutions.

Insurance Underwriting

In insurance, underwriting is the process of identifying the risk associated with the business that is to be insured. The insurer analyzes these risks, then finalizes the cost of the insurance policy for the business. As with many tasks discussed in this article, this was once a manual process, but insurance companies now rely on ML- and DL-based technologies to enhance the underwriting process.

Machine learning has three primary applications in the underwriting space. The first one is to analyze someone's claim risk. In claim risk use cases, companies try to establish the likelihood that the policyholder will file a claim. Usually, this results in a binary classification problem where the positive class indicates someone likely to file a claim, and the negative class represents a customer who's unlikely to file a claim. The second use is to identify the severity of the incident. This is a multiclass classification problem, with categories like low, mid, and high. The severity affects the amount that the claim will pay out. Finally, ML is used to generate insurance quotes based on the customer's profile. This is a combination of business rules that incorporate the outputs of multiple models, such as classification, clustering, and churn analysis models.
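The way business rules can sit on top of model outputs might look like the sketch below, where a claim probability and a predicted severity class feed a quote. The loading factors and the formula are entirely hypothetical and stand in for whatever rules an insurer actually uses.

```python
# Hypothetical loading factors per predicted severity class.
SEVERITY_LOADING = {"low": 0.10, "mid": 0.25, "high": 0.50}

def quote_premium(base_premium, claim_probability, severity_class):
    """Scale a base premium by expected claim risk; the formula is illustrative."""
    if not 0.0 <= claim_probability <= 1.0:
        raise ValueError("claim_probability must be between 0 and 1")
    loading = claim_probability * SEVERITY_LOADING[severity_class]
    return round(base_premium * (1 + loading), 2)

# claim_probability would come from the binary claim-risk model,
# severity_class from the multiclass severity model.
print(quote_premium(1000.0, 0.2, "high"))  # 1100.0
```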

Challenges of Using ML and DL in Financial Services

Challenges of machine learning are usually related to things like the model not performing well, poor feature engineering, user adoption, and/or bad data. In finance, not only do you have these issues, but you have additional challenges that must be addressed.

Regulatory Requirements

The finance sector is highly regulated, and there are many laws governing the collection, storage, and use of data; lending practices; and consumer rights. Regardless of whether decisions are being made by a manual process or an automated one, these requirements must be tightly adhered to.

One common regulatory requirement is that banks and other financial institutions must be able to explain to the consumer how a decision was reached—that is, the institution must be able to cite the specific primary reasons for which a loan was declined or an interest rate increased. This can make the use of complex, multilayer neural networks problematic in regard to regulatory compliance, as the increased complexity of the models can make it difficult to pinpoint specific factors that affected the outcome.

Another key regulatory requirement is that financial institutions must be able to reproduce the results of any model. In order to be able to do this, all the factors that affect model results (such as the data, code, and any tools used) need to be documented. However, machine learning models can be extremely complex, with numerous elements affecting model results. Because of this, documenting the model development is virtually impossible without a proper system in place.

That’s where an MLOps platform (such as Domino’s enterprise MLOps platform) comes in. It includes a powerful reproducibility engine that automatically tracks changes to code, data, or tools. This makes it easy for organizations to quickly reproduce the model results whenever they need to do so. Allstate, a leading insurance company, successfully uses Domino’s MLOps platform to document and reproduce work for both research and auditing purposes. Before moving to Domino’s platform, it took Allstate months to recreate existing models and to answer questions from regulators.
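Independent of any particular platform, the core idea of tracking code, data, and tools can be sketched with content hashes. This is not how Domino implements its reproducibility engine; it is a minimal illustration with assumed names (`run_record`, `fingerprint`) showing what would need to be recorded for a result to be reproduced later.

```python
import hashlib
import json
import platform
import sys

def sha256_of(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def run_record(training_data: bytes, source_code: bytes, params: dict) -> dict:
    """Collect the inputs that determine a model's result into one record."""
    record = {
        "data_sha256": sha256_of(training_data),
        "code_sha256": sha256_of(source_code),
        "params": params,
        "python_version": sys.version.split()[0],
        "platform": platform.platform(),
    }
    # Hash the record itself so two runs can be compared with a single string.
    canonical = json.dumps(record, sort_keys=True).encode("utf-8")
    record["fingerprint"] = sha256_of(canonical)
    return record

first = run_record(b"id,amount\n1,100\n", b"print('train')", {"max_depth": 4})
second = run_record(b"id,amount\n1,100\n", b"print('train')", {"max_depth": 4})
changed = run_record(b"id,amount\n1,100\n", b"print('train')", {"max_depth": 5})
```

Two runs with identical data, code, parameters, and environment share a fingerprint, while any change to one of them produces a different one.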

Bias

Anti-discrimination and other consumer rights laws need to be considered and built into your model from the very beginning. In the United States, the Equal Credit Opportunity Act makes it illegal to discriminate on the basis of a number of personal characteristics; in the European Union, the General Data Protection Regulation (GDPR) creates a legal obligation for lenders to have mechanisms that will ensure there are no biases in their models. While it's relatively easy to ensure that your model doesn't directly consider, for example, race or gender when evaluating a customer, it's also important to ensure that it doesn't consider things that can be proxies for these classes, such as living in a specific area, or having attended a specific school. Models should be tested extensively to ensure that they don't introduce illegal bias to the decision-making process.
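One simple test of this kind compares approval rates across groups, using a protected attribute that is held out for testing rather than fed to the model. The sketch below computes the ratio of the lowest to the highest approval rate. The 0.8 cutoff is a widely used heuristic (the "four-fifths rule"), used here as an assumption and not as a legal standard for lending, and the decisions are toy data.

```python
def approval_rate(decisions):
    """Share of approved applications, where 1 = approved and 0 = declined."""
    return sum(decisions) / len(decisions)

def disparate_impact_ratio(decisions_by_group):
    """Lowest group approval rate divided by the highest one (1.0 means parity)."""
    rates = {g: approval_rate(d) for g, d in decisions_by_group.items()}
    return min(rates.values()) / max(rates.values()), rates

# Toy model decisions, grouped by an attribute the model never saw.
decisions = {
    "group_a": [1] * 8 + [0] * 2,  # 80% approved
    "group_b": [1] * 5 + [0] * 5,  # 50% approved
}
ratio, rates = disparate_impact_ratio(decisions)
flagged = ratio < 0.8  # assumed heuristic cutoff; flags the model for review
```

A flagged result does not prove discrimination, but it is a cue to look for proxy features such as postal code or school attended.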

The potential discrimination or bias that could be brought by machine learning models creates reputational risks for financial institutions. Even some of the largest companies in the world have come under public and regulatory scrutiny due to discriminating models. One example is that of Apple’s credit card, launched in 2019. Shortly after its launch, users noted that the model was sexist: women were assigned lower credit limits compared to men, even in cases when they had a higher credit score and income. Another infamous example is Amazon’s model used for hiring employees, which was later found to be strongly biased against women. The model was trained on historical data, in which resumes submitted by men greatly outnumbered those submitted by women. The model picked up this bias and learned to favor male candidates.

Data Access and Governance

The use of data in the financial services has several complications that are less common in other industries. Financial institutions often have data siloed by product, which makes it difficult to provide models with comprehensive data sets. Additionally, there are extensive regulations that govern the storage, use of, and access to this data, though the scope and strictness of those regulations vary by country.

In financial services, it's even more important than usual to ensure that your data is accurate, current, clean, unbiased, fully representative of the population, and in compliance with data locality regulations. Failure to do so may result in not just an inaccurate model, but in charges against your company and steep fines from financial regulators. When it comes to data-related fines, many of those are due to data breaches. However, under the Fair Credit Reporting Act, financial institutions may also be subject to fines due to not being able to explain the model results—for example, not being able to explain why a loan application was denied.

Due to the highly sensitive nature of financial data, it's also important—and often legally required—to limit access to the data. Data teams should work with anonymized data whenever possible, and care should be taken to ensure that the privacy of the consumers remains a paramount consideration throughout the process.

Legacy Technology

There may be some use cases where you need to build not just one model, but several models that are dependent on each other. In this situation, there are two primary considerations: one is the overall accuracy of the system, and the other is how you're going to connect the various processes. Machine learning and deep learning can require extensive hardware architecture to successfully train models, and legacy systems often don't have the capacity or flexibility to handle data-processing requirements or the deployment requirements.

Model Risk Management

These days, financial institutions rely heavily on models. Banks, insurance companies, and other financial institutions may have hundreds or even thousands of models in production at any given time, and that number keeps increasing substantially with each passing year. And it’s not only the number of models that keeps increasing, but their complexity increases too, with machine learning and deep learning techniques becoming more prominent.

The high number and complexity of models used in financial institutions create a lot of risks, since any one of those models not working as it should may cause significant damage to the institution. Risks can come from the models being faulty themselves, but also from correct models that are being misused. Whatever the reason, flawed models or their misuse can create notable issues, such as financial losses or poor business decisions. An infamous example is that of JP Morgan, which in 2012 suffered losses of more than $6 billion due to model errors. Flawed models also played an important role in the financial crisis of 2007-2008.

Since the risks related to using models can be significant, a need emerges for a framework that will manage those risks. That’s where model risk management comes in. Model risk management frameworks involve the monitoring of risks that arise from potentially faulty models or the misuse of correct models. Such a framework is needed both to comply with strict regulatory requirements and to minimize the losses that may come from model failures. Every financial institution that uses quantitative models for decision making needs to have a robust model risk management framework in place to supervise the risks that come with the models.

The best way to implement a model risk management framework is through an MLOps platform. MLOps entails the development, deployment and monitoring of machine learning models in production in the most efficient way possible, and is crucial for scaling complex data science processes.

Model Governance

Model governance is a framework by which financial institutions try to minimize the model risk explained above. It’s a subset of the larger model risk management framework. Model governance encompasses a number of processes related to the model, from its beginnings to being used in production.

The model governance processes start even before the model is developed, by pinpointing the people that should work on a model and their roles within it. While the model is developed, all versions of it have to be logged. And when the model is deployed, model governance should ensure that it’s working properly and isn’t experiencing data or concept drift. Most of these processes will be handled by an MLOps platform.

Having a strong model governance framework in a financial institution will maximize the quality and effectiveness of models, while simultaneously minimizing their risk. When a model isn’t performing as it’s supposed to, a good model governance framework should quickly recognize that. It should also handle the challenges of using machine learning in finance, such as concerns about bias and compliance with regulatory requirements.

Many companies use Domino’s MLOps platform in their model governance framework. For example, DBRS, a top-four credit rating agency, deployed Domino as the central platform for all of their data projects after seeing Domino’s governance capabilities. Another example is that of a leading Fortune 500 insurer, which benefits from the automatic tracking and full reproducibility of experiments, data sets, tools, and environments that Domino’s enterprise MLOps platform offers.

Model Validation

Model validation is a crucial part of the model risk management framework. It deals with ensuring that the models are performing as expected and that they’re solving the problems for which they were intended. Model validation is done after a model is trained, but before it’s put into production. However, validation doesn't stop there. The model is also validated immediately after it’s deployed to ensure that it works as it should in production. After that, model validation to confirm that the model still works at the expected standard should be performed frequently for as long as the model is in production. Model validation should be done by a team different from the team that trained the model to ensure that the results won’t be biased.

Model Deployment and Monitoring

Building, deploying, and monitoring ML/DL models is highly technical and detailed work that is impossible to do at scale with manual processes. Domino Data Lab’s Enterprise MLOps platform is architected to help data science teams improve the speed, quality, and impact of data science at scale. Domino is open and flexible, empowering professional data scientists to use their preferred tools and infrastructure. Data science models get into production fast and are kept operating at peak performance with integrated workflows. Domino also delivers the security, governance, and compliance that highly regulated enterprises expect.

These capabilities help financial institutions create model driven solutions for use cases such as:

- Underwriting and financial scoring: Domino helps insurance organizations, including Fortune 500 global leaders, to identify, test, and manage risk across the entire portfolio including underwriting, pricing, fraud detection, and regulatory compliance.

- Fraud detection: Domino helps you create different classification and computer vision based solutions to detect fraud in banking and insurance. It can help organizations identify fraudulent claims or transactions, and report them at runtime. A leading Fortune 500 insurer was able to improve fraud detection and risk management, and to significantly reduce cycle times, by using Domino’s platform.

- Personalization: Domino can help you to analyze data about individuals efficiently, based on which you can create different personalized offers and products for different customers. Gap used Domino to increase customer engagement by offering more personalized experiences.

These are just a few of the solutions; you can do a lot more in the ML and DL space with the help of the Domino Enterprise MLOps Platform.

Conclusion

In this article, you've learned about some of the ways machine learning and deep learning are helping businesses in the financial services improve their existing solutions or create new ones. As a highly regulated, high-stakes sector, the finance sector is particularly vulnerable to small mistakes in models that lead to massive consequences.

Gourav is an Applied Machine Learning Engineer at ValueMomentum Inc. His focus is on developing Machine Learning/Deep Learning pipelines, retraining systems, and transforming Data Science prototypes into production-grade solutions. He has consulted for a wide array of Fortune 500 Companies, which has provided him the exposure to write about his experience and skills that can contribute to the Machine Learning Community.