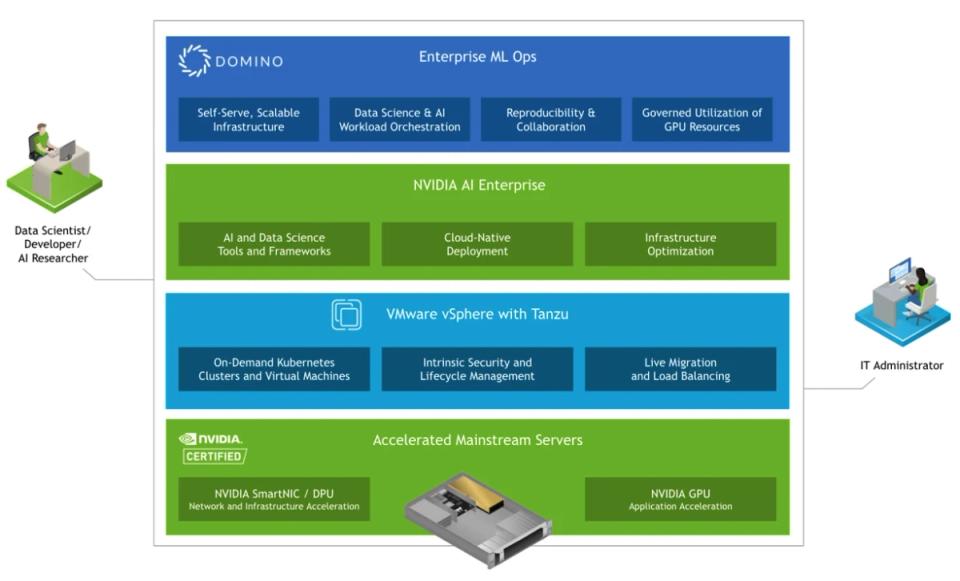

Provide self-serve access to infrastructure

Launch on-demand workspaces on the latest NVIDIA GPUs, preconfigured with open-source and commercial data science tools, frameworks, and libraries, with no DevOps work required.

Attach auto-scaling clusters, built on popular compute frameworks such as Spark, Ray, and Dask, that grow and shrink dynamically to meet the demands of intensive deep learning and training workloads.

Data scientists can focus on research, while IT teams no longer have to handle infrastructure configuration and debugging tasks.