Fuzzy Matching to the Rescue

Domino2017-11-01 | 30 min read

Jennifer Shin is the Founder & Chief Data Scientist at 8 Path Solutions. Jennifer is on the faculty in the data science graduate program at UC Berkeley, on the Advisory Board for the M.S. in Data Analytics program at the City University of New York, and teaches business analytics and data visualization in the graduate program at NYU. At a Data Science Popup, Jennifer delivered an introduction to approximate string matching, or fuzzy matching. This blog post covers a session summary, a video of the entire session, and a video transcript of the presentation. If you are interested in attending a future Data Science Popup, the next event is November 14th in Chicago.

Session Summary

In this Data Science Popup Session, Jennifer Shin, Founder & Chief Data Scientist at 8 Path Solutions covered fuzzy matching, or approximate string matching.

A few key highlights from the presentation include:

- Delving into how fuzzy matching finds nearly, but not exactly, identical strings by quantifying how close one string is to the other.

- Use cases for fuzzy matching ranging from searching for a list based on relevance to providing recommendations to replace potentially misspelled search words. This approach is particularly useful in a world where alternative spellings, inconsistent formatting, and misspellings can limit the usability and value of the information contained in large data sets.

- Examples were used to illustrate how fuzzy matching can be an ideal choice for entity and name matching, but a less than ideal for medical data, where the margins of error are too large.

For more insights from this session, watch the video or read through the transcript.

Video Transcript of Presentation

Hi, everyone.

How's everyone doing? You have a good lunch? you're awake? Not falling asleep on me, hopefully. OK.

I happen to also be the founder of 8 Path Solution, which is a data science, analytics, and technology company I started about six years ago. We just became a Box Technologies partner, which is pretty exciting stuff for us. I'm also a lecturer at University of California Berkeley. I teach as well. I teach statistics mostly, as well as data science.

Today I'm going to be talking about Fuzzy Matching. For those of you who don't know the topic-- hold on. [uses the slide remote control to modify the slide projection] Let's see. OK. Let's start out by thinking about why we're looking at Fuzzy Matching. Everyone's heard of big data. Big data being so important, there being such a huge amount of data that we all are trying to process, compute, makes sense of. And I'm sure for most of us, we're trying to navigate a big data world. What does it mean? What do we have to do? What is it, the challenges that we're going to face by looking in this space and looking at data?

I'm going to go through an introduction to fuzzy matching. Anyone who is not familiar with the topic, you will actually get a nice little introduction to it so you know what it is, why we use it, and then I'll go over a small use case that I've come across in my work. And in particular, it's a fuzzy solution for surveys. So that should help you have a better idea of—in a tangible real-world sense-- what it is we use fuzzy matching for. Like how do you actually apply it? Why is it useful? When do you want to use it? And then some real-world considerations. And if you're interested in actually using fuzzy matching in your work, how you can actually use it, and what you want to think about when you're implementing that sort of solution.

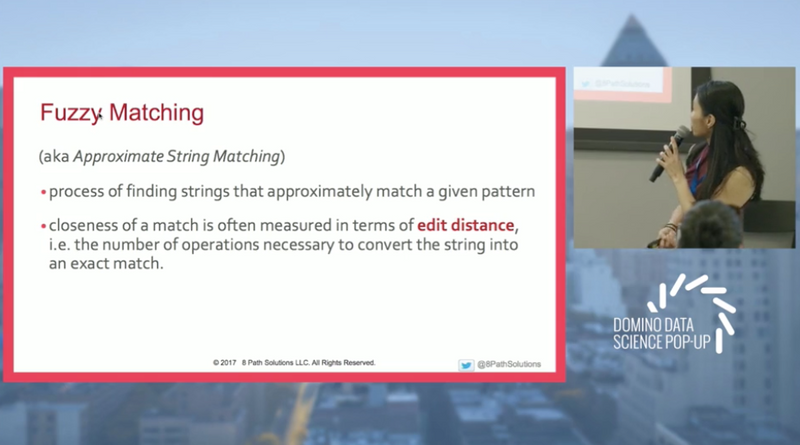

So what is fuzzy matching? For those of you who don't know, it's what we call approximate string matching. What does that mean? It's basically the process of finding a string that approximately matches a given pattern. Oftentimes, it might be a comparison between, say, two strings, and seeing how closely they match. And the idea of closeness, when we look at data, and we look at data science, we want to always quantify what we mean by these terms. There should always be a definition. It's not just a concept. We want to quantify what we mean by things like "close."

In this case, we measure in terms of what we call an edit distance. The edit distance is the number of operations necessary to convert a string into an exact match. It's a number of simple operations, or primitive operations, that we need to be able to get one string to match another string.

To give an idea of what that means, if you look at the first one, insertion. What does that mean? What does that mean, when we insert? It's pretty straightforward. It's what it sounds like. In this case, we have cot. and say we want to spell the word coat. We would need to insert the letter “a”, in order to be able to spell that word. So that's what we consider insertion.

Deletion, it's very straightforward. In that case, if we want to go the opposite direction, we would say, take out the “a”. And you can see in the first one, we have an “a” in there. And the second one we don't. And then substitution, of course, is exactly what it sounds like. We're substituting in an “s” in place of the “a”. You can spell other words by substituting characters in.

What exactly is this used for? If fuzzy search is done as a means of fuzzy matching program, which returns a list based on likely relevance, even though search argument words and spellings do not exactly match. It's like saying when you're searching for something, and it's not going to return an exact match of what you're searching for, not the exact term, but it might return something similar, or look for other similar words. And you may notice this when you Google something, that sometimes you didn't put in the exact word. But somehow it returned the values to you? That's where you're actually using fuzzy matching on the back end, to give you the results you're looking for.

So why do we need this? Well, in the big data world, you have to keep in mind that not all data is clean. That's one of the big ones… you've heard of dirty data. Not every organization is looking at data quality. That's especially the case if text is used. If you have a text field and someone enters in values, they can enter anything. In that case, in order to actually match the terms, that might be difficult because of spelling errors, misspellings, maybe alternative spellings. It could be punctuation. So, in that case, not all formatting is consistent. So again, if you wanted to search something and find an exact match, what you typically consider an exact match, it would have to match not only in just the words of the characters, but also formatting.

And you might notice this if you use Excel a lot. Have you guys used Excel? Sometimes you don't have this in number format, it won't read it as number. So again, formatting is not always there. Not all databases are structured. So for those of you who haven't heard of NoSQL databases, what you call unstructured data, unstructured data tends to be great. We collect lots of information on it. We can dump a lot of data in there. But because it's not structured, it can be very difficult to find what you're looking for.

Unlike traditional databases, where we have a set number of columns or a set number fields, in those NoSQL databases, you can actually append on anything you want later and in the future. Now, the flexibility is fantastic, in terms of if you're not sure what you're going to actually use the database for, or you're not sure what additions you're going to add, it's great. When you have to then go back in and find information, you have all this information, and it's not consistently collected. It can become a very big challenge. And of course, not all text is correct. That's another thing to keep in mind. And of course, people are not perfect. I think the last one is really the thing to keep in mind. We all make mistakes, whether or not we intend to or not. And so that actually causes a lot of issues, in terms of searching for things.

For instance, if you're looking up an e-mail and you're like, I swear this person emailed me, and you can't remember their email address, but you kind of remember their name, but you're not exactly sure what their last name is, in that case, and if you misspell that person's name, you have nothing. That's where fuzzy search can be very, very useful, very, very helpful.

So when can we use fuzzy matching? Well, it's really on a case-by-case basis. Depending on what type of data you're working with, depending on what you're trying to do, fuzzy matching can, or might not be, an ideal solution. In some cases it's great. But in other cases, where you really do need it to be an exact match, or the risk of a mismatch is a problem, you may not want to use it.

For instance, if you're working with medical data, there could be a lot of drug names are very similar but not exact matches. And maybe you're pulling the wrong one because your specification for what's considered correct is too wide. You're allowing too large a margin of error. In that case, this may not be an ideal solution.

For data cleaning, if you notice in this example, there's two that are duplicates. The first row and the third row. And then you see the second row is a duplicate, but not. Slightly spelled differently. Again, this is an example of where if you're cleaning data and trying to make everything consistent, which is also very important for aggregating data, and being able to collect things like statistics on it, then you want to be able to actually use some sort of algorithm to be able to automate that process.

Entity and Name matching, it's usually the typical example many people use. And one of the reasons for this, if you notice, like, Microsoft, Microsoft Inc, Microsoft Incorporated, Microsoft Company; these are all ways that people might reference Microsoft on the internet. But you want to maybe standardize, like we saw in the previous example, and have one instance of Microsoft in your systems. In that case, fuzzy matching can be great, because Microsoft is a brand, it's a company. It's easier to match that than say, just general text words. You have a smaller, more finite list that you're comparing against. And it's much more easy to actually have a differentiated result. A company name is less common than say, common words that we use.

Recommendations is where you'll often see this. For instance, in this example, if you misspell a word, it might say, maybe you meant to spell it this way. And then predictive text, as well. In this case, for instance, “apple” was spelled incorrectly. It might give you other options, with “apple” as one of the options. So again, these are examples of ways that fuzzy matching can be actually used when you work with data. And you can see that it actually involves any stage of the process of working with data. It can be processing the data. It can be pre-processing the data. It can be post-processing the data. It can be somewhere where you're actually showing the data and making recommendations to the user. Or it can be getting the data to a point where you can actually release it and have people look at it.

An interesting use case is actually with surveys. Suppose you have a survey, and you have lots and lots of data for the survey. And you get back the results. And when you think about a survey, as a person filling it out, it seems simple enough. You fill it out, you send it, you're done. Now, if you you're collecting surveys over a period of time consistently, say every six months, over time, as you decide that, OK, that question was great. But we really need to ask it slightly differently. Now, when you make these changes across the tons and tons of questions, what's happening is the results you get back now vary. It's slightly different. So in this case, if you noticed, the way that the responses are now returned, it's slightly different. The formatting is slightly different. The text is slightly different. In this case, on the left you see Light Users. On the right you see Light, just Light without the Users. The order in which the words and the terminology is there. That's also inconsistent. And then category, also, is inconsistent. So this is a good example of, if you're thinking of working with another data provider.

Say, at your company you want to integrate data or you're looking to get new data from another source. Unless you have written somewhere where they guarantee that they're going to return it to you in a specific way-- and generally, I doubt they'll actually do that-- you can be in a situation where you've purchased data that's great. But now it's coming back to you every time slightly different. You don't have control over how they give you the data. They only promise the delivery of it. And now you need to account for the fact that you need it to be consistent on your end, to be able to offer it to your clients for services.

In this case, you want to be able to see how similar is A to B. So, for one or two questions, it's not a big deal. Now, if you have hundreds of questions, then for each response, that's another answer. So now you multiply that by 5. And then it starts to get much, much bigger.

So in this case, we had a survey that changed every six months. And the questions would get reworded. Which again, in a real-world sense, makes sense. You think about the question, it was answered well. But you have a better question, so you want to improve on your survey. And the most questions were generally the same. So they might be reworded. Or in most cases, in 80% of the cases, there are no changes. The issue with this, of course, as you saw, is that it's raw and messy data. The data, there's no consistency in formatting. There's no consistency in the way that you're getting the response back. And it also required, of course, updating a data dictionary, or some sort reference. It doesn't necessarily have to be a data dictionary, but some reference point that ties in your previous period with your current period.

So let's look at some examples of this. It's in the past what we had done was to look at a word-based comparison. So you'll see here, an old label and a new label. And basically, what it did was count how many words are in both labels. Simple, right? What's the easiest way to look at this? Well, let's say we check for each word on the old label and see if it's in the new label, or vice versa. In this case, we have six words. So six words, and we said OK, let's add a threshold. We have to figure out some way to quantify what we consider to be a good match. So, in this case, we want to say, five is a good match. By that standard, we could say, this is a good match. Five is the threshold. It's a higher score than five, so we should be good. If you didn't notice where the words were, you can see it in red here. "Anxiety/Panic Used a branded prescription remedy."

So, what does this go wrong? Well, think about brand names. Brand names are something where it actually does matter, the spelling of it. For instance, also, whether or not the brand name exists also matters. So if in one instance a brand name exists, and in the other instance it doesn't, it can say, it's still a good match. You see a score of 7 here. And you have two different brands. By that threshold standard, it's a good match. But of course, if you're looking at it from a practical standpoint, it's not a good match.

Similarly, you see in the second one, it's a score of 9. It's 4 above five. 5. Great. Except for the fact that it does not have that brand name you need. So if you actually need to align the questions with brand, this is problematic. Another place where it went wrong is the numbers. Numbers matter here. When you're dealing with survey questions, the range of that you're providing in your answer is going to matter. If you're going simply on matching one label to the next label, and just literally on the count of how many matches there are, it might completely miss the number.

If you look here, these scores are even better than the last one. You have 11 and 12. you'll see in the first example, it's $75 to $149. versus on the right side, you see it's $50 to $74. That's a big difference, right? It's a really big difference in where that response is given. On the second one, you'll see it's 8-plus, versus 3-plus, again. There's a huge difference between 3-plus and 8-plus. And then the third one's a little more subtle. The third one, you see it's a 2-plus, versus on the right side it's a 2. But anyone who knows math, you know that equal to and greater than or equal to are very, very different things. Typically you want to know if there's that plus there.

So how do we actually approach dealing with this issue? It was obviously, doing a direct match, what we consider a more classical matching solution, where you look up one word, find another word, doing it that way, is great in a rudimentary way. But we have better technology now. We have better options now. And ideally, we need to find a smarter solution. Because ultimately, if we miss these things, someone has to go in and manually go and correct this. In this case, someone has to actually go in and change this every two weeks. Or it took them two weeks to change it every six months. So that's a long time that someone's looking at this and actually matching. Because it was about 20,000 rows they had to match. And when you think about the 80%, 80% of the questions are the same. Well, they have to actually match those as well. You have to filter out the ones that match, on top of the ones that don't match, and then match the ones that don't match, and see how many are new versus how many exist. So it's not just looking at changes. It's also looking at deletions and additions as well.

So Levenshtein's Distance, it's one approach for fuzzy matching. Fuzzy matching is a general concept. So you can have different kinds of distance measures, different ways you can actually use fuzzy matching. In this case, if we look at this one, we actually are seeing let's look at the distance between two words as the minimum number of character edits, single character edits. For instance, if you saw, in the previous example that we had with the definition, we had insertions, deletions, and substitutions. Each of those, we count as one, for each character that you're changing. There's other methods you could use, like Hamming Distance. In that case, you're actually comparing how many are equivalent, how many characters are equivalent. And then other ones as well, in addition to these. But in this case, we looked at Levenshtein's Distance. So this is the formal definition.

So again, we want to quantify these things. It's nice as a concept. But to actually find the value and be able to use it with data, you need to be able to quantify it in a way that you can define it, be consistent, and have it actually make sense. OK. So-- [INAUDIBLE – question from the audience] Yeah, so in this case, if one of them is 0, then you're just going to take the max of the 2, right? But You see on the right side that if the minimum of these 2 are 0, if there's one that's a 0, then you take just the maximum. And otherwise, you're taking, again, the minimum number of edits.

So let's see what this actually means. We have these two words we're trying to match. How do we actually match these words? How do you convert the first word to the second word? In the first case, in the first row, you'll see that we can add it. The first two are the same, “S” the same. “M” is the same. Now, you need to convert “T” to “M”. So you'll notice that we changed “T” to “M”. And then we look at the fourth one, which is a “C”. And then, of course, then that doesn't match the “T”. So we have to change the “T” to a “C”. Then again, we have to change the next one from an “H” to a “C”. And then the “G” to an “H”, and then a “Y” to a “G”. So then you end up having the first word-- all the way on the left-- now, converting to the last word, all the way to the right. Now, there's better ways to do this.

In the second row, you'll see that we can just insert an “M” and delete a “Y”. Easier, faster way. This is an example of there's more than one way that you can edit this word. There's more than one way you can actually get to what you're trying to get to. And in this case, what we're looking at is the minimum. We're actually going to say, “we're defining this number, the actual distance, as the minimum number”. So, that's ultimately what helps us actually be able to use this sort of methodology and not have there be a conflict. So we had an intern, who actually looked into this. And he came up with the idea of, “why don't we compare and use this fuzzy matching solution? Not on a word-by-word, but cell-by-cell.” So looking at the structure of the different responses we had, and then leveraging that.

You'll see here we have two different options; a previous one and a new one. And social networking, LinkedIn, how important to you? Keep in touch with family/friends, and not at all important. You notice it's slightly different than the one on the right, where the order is different from the last two sections. So here you'll see that if you actually take the new label, all the way at the top row, and then the old labels, if you put that on the left, and then each of these you can look at how many do we insert? How many do replace? How many do we delete? And then the ones in red are the ones that are the minimum. So in this case, we had it such that for the second and third part of the label, they're actually the same. it's just reversed order. . Which is why we end up seeing 'insert' 0, 'replace' 0, 'delete' 0, because they're a match. They're just in different order. It allows you to not just look at the edits, in terms of the actual word, but actually leverage that to be able to see the order of the entire terms.

And then another thing you may notice, you'll see that if you look at the actual examples, it's slightly different when we actually convert it into the text. That's something to keep in mind. We got rid of the spaces. We got rid of the punctuation. So when you're actually using something like fuzzy matching as a real solution, you may have to convert the data in such a way that it makes sense to be able to leverage the algorithm.

So a real-world consideration you want to think about is the data suitability. As I mentioned earlier, it depends on what kind of data you're working with, whether or not fuzzy matching is a good solution to use. The process design, as you saw, it's not just simply just taking the data and just looking at it and comparing. You saw that we had to strip the words, get rid of the spaces, get rid of the punctuations. You're able to convert it into a format where you can actually leverage the algorithm.

Validation methodology is another one. For instance, after you run the algorithm, how do you know it worked correctly? Because you shouldn't just assume, OK, I ran the algorithm. It's great. It may not be so great. There's instances where the match is like you saw with brand names. Maybe it's missing brands. So you have to find some way to validate that the results are accurate.

And then computing resources, and that's really the ones you have to keep in mind. When you do an exact match search, it just looks through each of the instances. It looks for a word, goes through every row, and tries to find it. When you're doing fuzzy matching, though, don't forget, it's not just looking at each instance. It's actually converting it and then looking for those instances. So compute is actually going to be much more important to be able to get your result on time. So say, like, your process currently takes you a day. Well depending on what kind of data you have, it could take two weeks. It could take three weeks. So you have to keep that in mind when you do this. Because otherwise you might go, “I have a great solution for you guys. I'm going to improve the way that we do this,” and never have a deliverable on time. So we really want to think about computing resources. Do you have the resources to do this?

And for anyone who's actually interested in being able to see this in action, if you have Python, if you're a Python user, there's something called fuzzywuzzy. That's pretty popular. You can use fuzzywuzzy. Evidently it's not just a bear. It's a Python algorithm. So again, it's simple. Simple to use. If you're an R user, you can use string distance or agrep, approximate string matching, fuzzy matching. And it's a slightly different approach. And you'll see, in the string distance, you have more options. As I mentioned, there's Hamming. There's some other options you have for distance measures, whereas the second one, you only have one.

So if you're a Spark user, there's a couple of ways you could go about it. You could, in theory, write the definition in Python, of how to actually calculate this. Do it in Python. You could do it in Scala.

Or we could just go a little bit more directly and just use the Spark function itself. Which it comes with this distance edit. So it's a little easier if you do it this way. And you see you just import it in the function. And I believe it's part of when you actually install it. It should be there. So is the format. And the first where you'll see that, that's how you import in the function, to be able to use it. In the second one we're saying, OK, let's create a data frame. So we're going to create a data frame. And say we're going to insert these two words that we're comparing, and find an “L” and an “R”. And the “L” and “R”, based on the words. And then we're going to select in the third row. We select based on that.

So, as an example, if you see on top, you have two different words. And we can actually run that. So again, you see the two words are put into the df0. And then there's an “L” and an “R”. The “L” and the “R” is not something you change. And technically, you can put in any variable. It doesn't have to be “L” and “R”. It can be “A” and “B”. Doesn't really matter. But then you'll see that's carried over to the next line. And then it'll output D1 equals 4.

So this is another example, kitten versus sitting. Same thing. You'll see just the words change in the algorithm. And then it outputs again, D1. But you can again, chance D1 to anything you want. Kitten and Sitten, this is the one. In this case we have, of course, one difference. The K and the S are the only difference. So for those of you who are interested in machine learning, IBM, I think they're having an announcement next week online, about some of their machine learning offerings. It might be of interest to you. So that's the link, if you're interested in that. You're welcome to email me if you have any questions. And I guess, if we have a minute or two, yeah. So feel free to ask a question if you have any.

Domino Editorial Note: This transcript has been edited for readability.

Domino Data Lab empowers the largest AI-driven enterprises to build and operate AI at scale. Domino’s Enterprise AI Platform provides an integrated experience encompassing model development, MLOps, collaboration, and governance. With Domino, global enterprises can develop better medicines, grow more productive crops, develop more competitive products, and more. Founded in 2013, Domino is backed by Sequoia Capital, Coatue Management, NVIDIA, Snowflake, and other leading investors.