How to Use GPUs & Domino 5.3 to Operationalize Deep Learning Inference

Vinay Sridhar | 2022-10-13 | 6 min read

Deep learning is a type of machine learning and artificial intelligence (AI) that imitates how humans learn by example. While that sounds complex, the basic idea behind deep learning is simple. Deep learning models are taught to classify data from images (such as “cat vs. dog”), sound (“meow vs. bark”), or text (“tabby vs. schnauzer”). These models build a hierarchy in which each layer builds on knowledge gained from the preceding layer, and training iterations continue until the model reaches its accuracy goal. Deep learning models often achieve accuracy that rivals human judgment, in a fraction of the time.

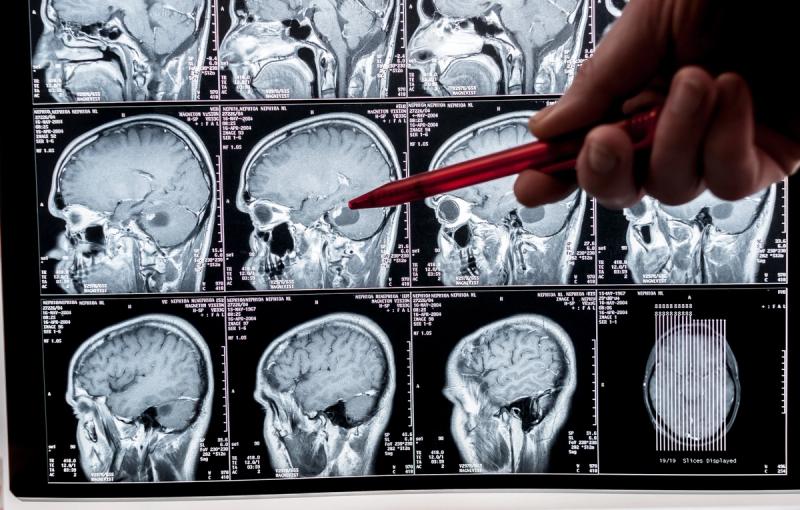

Our customers apply these same concepts in their work, but instead of using deep learning to tell the difference between a cat and a dog, they use advanced techniques to analyze pathology images to identify malignant vs. benign tumors, or analyze drone footage to find damage to roofs after a hailstorm. If you’ve ever received a call from your credit card company to verify recent transactions, that’s deep learning at work analyzing millions of transactions in real time to look for patterns that could indicate fraud.

Domino already offers the best environment for training advanced deep learning models, and there are numerous examples such as these use cases from Janssen and Topdanmark. With Domino 5.3, we’re extending our advantage in training deep learning models to enable the model-driven enterprise to productionize deep learning at scale, with no DevOps skills required.

Domino 5.3 for Faster, GPU-based Model Inference

With Domino 5.3, you can deploy your most advanced deep learning models and take advantage of the compute power of GPUs, instead of having to rely on slower CPUs. Those who take this approach realize a significant performance improvement for deep learning and other massively parallel computing applications. Studies have shown that GPUs are five to fifteen times faster for inference for deep learning applications than CPUs at similar costs.

By incorporating GPUs for model inference, companies can deploy models that rely on advanced frameworks such as TensorFlow and high-performance GPU-accelerated platforms such as NVIDIA V100 and NVIDIA T4. Normally, setting up a REST endpoint for a deep learning model requires a fair amount of DevOps skill to configure the compute resources (such as GPUs) to handle the expected load and processing requirements. Domino 5.3 abstracts this complexity away, so data scientists can deploy their deep learning model to a Domino Model API in just a few steps. As you’ll see below, they can select the number of compute instances along with the resources to be allocated, all with just a few clicks.

How to Deploy Models in Domino with GPUs

Domino offers admins an easy interface to define resource tiers that data scientists can use to size their deployments. Starting with a node pool that is configured with GPUs, an admin can define multiple tiers, each specifying the memory and compute configuration of an instance of the Model API.

Domino offers an easy, two-step process to deploy models as REST endpoints (Model APIs). When a user wants to deploy a Model API, all they need to do is select the compute environment and files to execute, then pick a hardware tier that contains the GPU. That’s it! Instead of having to learn complex DevOps skills or get IT involved to configure Kubernetes nodes and pods to make GPUs available for the hosted model, you can accomplish all this with just a few clicks.
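To make "files to execute" concrete, here is a minimal sketch of what such a file might contain: one Python function that is called per request and uses a GPU when one is visible. Everything in it is an assumption for illustration. The names `select_device`, `predict`, and `WEIGHTS` are hypothetical, the "model" is a toy dot product, and PyTorch is used only if it is installed in the compute environment (the sketch falls back to plain Python otherwise).

```python
import importlib.util

# Hypothetical stand-in for trained weights; a real file would load a saved model.
WEIGHTS = [0.5, -0.25, 0.1]


def select_device() -> str:
    """Return "cuda" if PyTorch is installed and sees a GPU, otherwise "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch

    return "cuda" if torch.cuda.is_available() else "cpu"


def predict(features):
    """Score one input vector; this is the function the endpoint would invoke."""
    device = select_device()
    if importlib.util.find_spec("torch") is None:
        # Pure-Python fallback so the sketch runs without PyTorch.
        score = sum(w * x for w, x in zip(WEIGHTS, features))
    else:
        import torch

        # Place both tensors on the selected device before computing.
        w = torch.tensor(WEIGHTS, dtype=torch.float32, device=device)
        x = torch.tensor(features, dtype=torch.float32, device=device)
        score = float(torch.dot(w, x))
    return {"score": score, "device": device}


result = predict([1.0, 2.0, 3.0])
```

The same function runs unchanged on a CPU-only tier and on a GPU tier, which is the property that lets the hardware choice stay a deployment-time decision.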

If users want to scale their deployment, they can select more than one instance. Domino ensures that each scaled-out instance gets deployed with its own GPU resources, as defined in the resource quota that the admin has set up.
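The relationship between a tier and a scaled-out deployment can be sketched with a little arithmetic. This is not Domino's API: the `HardwareTier` class, its field names, and the `gpu-small` tier are hypothetical, chosen only to show that each instance carries its own copy of the tier's resources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HardwareTier:
    """Hypothetical description of one admin-defined resource tier."""

    name: str
    cpu_cores: float
    memory_gib: float
    gpus: int


def total_resources(tier: HardwareTier, instances: int) -> dict:
    """Total resources requested when `instances` copies of a tier are deployed."""
    if instances < 1:
        raise ValueError("a deployment needs at least one instance")
    # Each instance gets its own full allocation, so totals scale linearly.
    return {
        "cpu_cores": tier.cpu_cores * instances,
        "memory_gib": tier.memory_gib * instances,
        "gpus": tier.gpus * instances,
    }


gpu_small = HardwareTier(name="gpu-small", cpu_cores=4, memory_gib=16, gpus=1)
totals = total_resources(gpu_small, instances=3)
```

A calculation like this is a quick way to check that the GPU node pool has enough capacity before scaling out.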

Once users publish the Model API, they can view and manage it from a centralized dashboard in Domino, which provides a single pane of glass to observe and manage all of their deployed models.

Generate Predictions from the Model API

Once running, the Model API is ready to accept requests and put the GPU to work. It executes user-defined code that takes advantage of optimized hardware (like GPUs) to accelerate complex workloads such as inference (predictions) for deep learning.
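A client request to such an endpoint might look like the sketch below, which uses only the Python standard library. The URL, `MODEL_ID`, `ACCESS_TOKEN`, and the `features` input are placeholders. The `{"data": ...}` payload wrapper and the use of the token as both basic-auth username and password are assumptions here, so confirm both against the calling instructions shown for your own deployed model.

```python
import base64
import json
import urllib.request


def build_prediction_request(url: str, token: str, inputs: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a hosted model endpoint."""
    # Assumed payload shape: model inputs wrapped in a top-level "data" key.
    body = json.dumps({"data": inputs}).encode("utf-8")
    # Assumed auth scheme: HTTP basic auth with the token as user and password.
    credentials = base64.b64encode(f"{token}:{token}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials}",
        },
    )


request = build_prediction_request(
    "https://domino.example.com/models/MODEL_ID/latest/model",  # placeholder URL
    "ACCESS_TOKEN",  # placeholder token
    {"features": [1.0, 2.0, 3.0]},  # hypothetical model input
)

# Sending is left commented out because the URL above is a placeholder:
# with urllib.request.urlopen(request, timeout=30) as response:
#     prediction = json.load(response)
```

Because the caller only sees a REST endpoint, nothing in the request changes when the model behind it moves from a CPU tier to a GPU tier.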

Use GPUs + Domino 5.3 for Deep Learning Innovation

Domino 5.3 helps enterprise organizations operationalize deep learning by associating GPUs with deployed models to speed up complex inference processing. By abstracting away DevOps complexity and providing an avenue to take advantage of the latest innovation in the open source community, Domino provides data scientists a simple workflow to realize the business value of their deep learning projects.

There is amazing innovation happening in the open-source community around deep learning, including the BERT project on Hugging Face and pre-trained image detection frameworks such as the OpenMMLab Detection Toolbox. My Domino colleagues have published a number of detailed blog posts on these topics; some of my favorites are this post on TensorFlow, PyTorch, and Keras from earlier this year, as well as this post about how financial services firms use deep learning. Whether you’re a beginner just getting started with deep learning or an advanced user looking for cutting-edge applications and ideas, I’d encourage you to visit the Domino Data Science Blog to learn more.

Vinay Sridhar is a Principal Product Manager at Domino. He focuses on model productionization and monitoring with an aim to help Domino customers derive business value from their ML research efforts. He has years of experience building products that leverage ML and cloud technologies.