A Guide to Machine Learning Model Deployment

David Weedmark | 2021-11-03

Machine learning (ML) model deployment involves placing a working ML model into an environment where it can do the work it was designed to do. Deploying and monitoring a model takes a great deal of planning, documentation and oversight, as well as a variety of tools.

What Is Machine Learning Model Deployment?

Machine learning model deployment is the process of placing a finished machine learning model into a live environment where it can be used for its intended purpose. Data science models can be deployed in a wide range of environments, and they are often integrated with apps through an API so they can be accessed by end users.

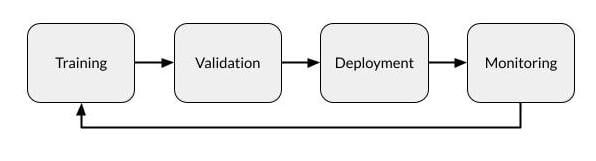

While model deployment is the third stage of the data science lifecycle (manage, develop, deploy and monitor), every aspect of a model’s creation is performed with deployment in mind.

Models are usually developed in an environment with carefully prepared data sets, where they are trained and tested. Most models created during the development stage do not meet their objectives, and the few that pass testing represent a sizable investment of resources. Moving such a model into a dynamic environment therefore requires a great deal of planning and preparation if the project is to succeed.

How To Deploy a Machine Learning Model Into Production

Machine learning model deployment requires people with a range of skills working together. A data science team develops the model, another team validates it and engineers deploy it into its production environment.

Prepare To Deploy the ML Model

Before a model can be deployed, it needs to be trained. This involves selecting an algorithm, setting its parameters and training it on prepared, cleaned input data. All of this work is done in a training environment, which is usually a platform designed specifically for research, with tools and resources required for experimentation. When a model is deployed, it is moved to a production environment where resources are streamlined and controlled for safe and efficient performance.
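To make the training step concrete, here is a minimal sketch in Python, assuming a toy one-feature logistic regression model trained with plain batch gradient descent. The learning rate and number of epochs stand in for the parameters set before training, and `to_json`/`from_json` are hypothetical helpers showing how the learned parameters might be serialized so they can leave the training environment. A real project would typically use an ML library and its own model format.

```python
import json
import math
from dataclasses import asdict, dataclass


@dataclass
class LogisticModel:
    """One-feature logistic regression: P(y = 1 | x) = sigmoid(weight * x + bias)."""
    weight: float = 0.0
    bias: float = 0.0

    def predict_proba(self, x: float) -> float:
        z = self.weight * x + self.bias
        # Numerically stable sigmoid: avoids overflow in math.exp for large |z|.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def predict(self, x: float) -> int:
        return int(self.predict_proba(x) >= 0.5)


def train(xs, ys, learning_rate=0.1, epochs=1000):
    """Fit the model with batch gradient descent on the log loss.

    learning_rate and epochs are chosen before training; weight and bias
    are what training learns from the data.
    """
    model = LogisticModel()
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = model.predict_proba(x) - y  # gradient of log loss w.r.t. the logit
            grad_w += error * x
            grad_b += error
        model.weight -= learning_rate * grad_w / n
        model.bias -= learning_rate * grad_b / n
    return model


def to_json(model: LogisticModel) -> str:
    """Serialize the learned parameters so they can be moved to production."""
    return json.dumps(asdict(model))


def from_json(text: str) -> LogisticModel:
    """Rebuild the model from its serialized parameters, e.g. on the production side."""
    return LogisticModel(**json.loads(text))
```

In practice, the string returned by `to_json(train(xs, ys))` would be written to a file or model registry and loaded with `from_json` once the model reaches its production environment.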

While this development work is being done, the deployment team can analyze the deployment environment to determine what type of application will access the model when it is finished, what resources it will need (including GPU/CPU resources and memory) and how it will be fed data.
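The deployment team's findings can be captured as a simple requirements record and checked against a candidate environment. The sketch below is illustrative only: `DeploymentSpec`, `Environment` and `unmet_requirements` are hypothetical names, and the fields are assumptions based on the questions listed above (which application calls the model, what CPU, GPU and memory it needs, and how it receives data).

```python
from dataclasses import dataclass, field


@dataclass
class DeploymentSpec:
    """Hypothetical record of what a model needs in production."""
    consumer: str            # application that will call the model, e.g. "web-app"
    cpu_cores: int
    memory_gb: float
    gpus: int = 0
    input_source: str = ""   # how the model is fed data, e.g. "REST request"


@dataclass
class Environment:
    """Hypothetical description of what a target environment offers."""
    cpu_cores: int
    memory_gb: float
    gpus: int = 0
    input_sources: set = field(default_factory=set)


def unmet_requirements(spec: DeploymentSpec, env: Environment) -> list:
    """Return human-readable problems; an empty list means the environment fits."""
    problems = []
    if env.cpu_cores < spec.cpu_cores:
        problems.append(f"needs {spec.cpu_cores} CPU cores, environment has {env.cpu_cores}")
    if env.memory_gb < spec.memory_gb:
        problems.append(f"needs {spec.memory_gb} GB memory, environment has {env.memory_gb}")
    if env.gpus < spec.gpus:
        problems.append(f"needs {spec.gpus} GPU(s), environment has {env.gpus}")
    if spec.input_source and spec.input_source not in env.input_sources:
        problems.append(f"input source {spec.input_source!r} is not available")
    return problems
```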

Validate the ML Model

Once a model has been trained and its results have been deemed successful, it needs to be validated to ensure that its one-time success was not an anomaly. Validation includes testing the model on a fresh data set and comparing the results with those of its initial training. In most cases, several different models are trained, but only a handful are successful enough to be validated. Of those that are validated, usually only the most successful model is deployed.
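A minimal sketch of this validation step, assuming each trained candidate is a Python callable that maps an input to a predicted label and that accuracy is the metric of interest. The `max_gap` threshold is a hypothetical setting: a candidate whose accuracy on the fresh data set falls more than `max_gap` below its training accuracy is treated as failing validation, and the best of the remaining candidates is selected.

```python
def accuracy(model, xs, ys):
    """Fraction of examples the model labels correctly."""
    correct = sum(1 for x, y in zip(xs, ys) if model(x) == y)
    return correct / len(ys)


def validate_and_select(candidates, train_data, fresh_data, max_gap=0.05):
    """Validate trained candidates on fresh data and pick the best one.

    candidates: dict mapping a model name to a callable x -> predicted label.
    train_data, fresh_data: (xs, ys) tuples.
    A candidate fails if its fresh-data accuracy is more than max_gap below
    its training accuracy. Returns (best_name or None, per-model report).
    """
    report = {}
    for name, model in candidates.items():
        train_acc = accuracy(model, *train_data)
        fresh_acc = accuracy(model, *fresh_data)
        report[name] = {
            "train": train_acc,
            "fresh": fresh_acc,
            "passed": train_acc - fresh_acc <= max_gap,
        }
    passed = [name for name, r in report.items() if r["passed"]]
    best = max(passed, key=lambda name: report[name]["fresh"]) if passed else None
    return best, report
```

The per-model report is also a natural thing to keep with the validation documentation, since it records how each candidate performed on both data sets.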

Validation also includes reviewing the training documentation to ensure that the methodology was satisfactory for the organization and that the data used corresponds to the requirements of end users. Much of this validation is driven by regulatory compliance or organizational governance requirements, which may, for example, dictate what data can be used and how it must be processed, stored and documented.

Deploy the ML Model

Deploying the model involves several steps, some of which can be done concurrently.

First, the model needs to be moved into its deployment environment, where it has access to the hardware resources it needs as well as the data source it will draw its data from.
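One small part of moving a model into its deployment environment is making sure the artifact that arrives is the one that was validated. The following sketch assumes the model's learned parameters fit in a JSON-serializable dictionary; `package_model` and `load_verified` are hypothetical helpers that use a SHA-256 checksum from Python's standard `hashlib` module to detect a corrupted or substituted artifact before it is loaded.

```python
import hashlib
import json
from typing import Tuple


def package_model(params: dict) -> Tuple[bytes, str]:
    """Serialize model parameters and compute a SHA-256 checksum.

    The checksum travels with the artifact (for example, in a deployment
    manifest) so production can confirm it received the validated model.
    """
    payload = json.dumps(params, sort_keys=True).encode("utf-8")
    return payload, hashlib.sha256(payload).hexdigest()


def load_verified(payload: bytes, expected_sha256: str) -> dict:
    """Refuse to load an artifact whose contents do not match its checksum."""
    actual = hashlib.sha256(payload).hexdigest()
    if actual != expected_sha256:
        raise ValueError(f"model artifact checksum mismatch: {actual} != {expected_sha256}")
    return json.loads(payload.decode("utf-8"))
```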

Second, the model needs to be integrated into a process. This can include, for example, making it accessible from an end user’s laptop using an API or integrating it into software currently being used by the end user.
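As an illustration of API access, here is a minimal sketch of an HTTP prediction endpoint built only on Python's standard `http.server` module. The `predict` function is a hypothetical stand-in for a trained model, and the `/predict` path and `x1`/`x2` fields are assumptions made for the example; a production service would usually add authentication, stricter input validation and a more robust web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical stand-in for a real model: a linear score with a 0.5 cutoff."""
    score = 0.8 * features["x1"] + 0.2 * features["x2"]
    return {"score": score, "label": int(score >= 0.5)}


class PredictionHandler(BaseHTTPRequestHandler):
    """Accepts POST /predict with a JSON body and returns the prediction as JSON."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            features = json.loads(self.rfile.read(length))
            body = json.dumps(predict(features)).encode("utf-8")
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, missing fields or non-numeric values.
            self.send_error(400, "expected JSON with numeric fields x1 and x2")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging in this sketch


def make_server(host="127.0.0.1", port=8000):
    """Create (but do not start) the prediction server."""
    return HTTPServer((host, port), PredictionHandler)
```

Calling `make_server().serve_forever()` starts the service on port 8000; a client application can then send a POST request with a body such as `{"x1": 1.0, "x2": 0.0}` to `http://127.0.0.1:8000/predict`.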

Third, the people who will be using the model need to be trained in how to activate it, access its data and interpret its output.

Monitor the ML Model

The monitor stage of the data science lifecycle begins after the successful deployment of a model.

Model monitoring ensures that the model is working properly and that its predictions are effective. Of course, it’s not just the model that needs to be monitored, particularly during the early runs. The deployment team needs to ensure that the supporting software and resources are performing as required, and that the end users have been sufficiently trained. Any number of problems can arise after deployment: Resources may not be adequate, the data feed may not be properly connected or users may not be using their applications correctly.

Once your team has determined that the model and its supporting resources are performing properly, monitoring must continue, but most of it can be automated until a problem arises.

The best way to monitor a model is to routinely evaluate its performance in its deployed environment. This should be an automated process, with tools that track metrics and automatically alert you to changes in the model's accuracy, precision or F1 score.
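A minimal sketch of such an automated check, assuming binary labels and that the true outcomes for recent predictions eventually become available. `classification_metrics` computes accuracy, precision, recall and F1 score, and the hypothetical `check_for_degradation` compares them with a baseline (for example, the scores recorded during validation) and returns an alert message for each metric that dropped by more than a chosen tolerance. How the alerts are delivered is left to whatever monitoring tool is in use.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 score for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def check_for_degradation(baseline, current, tolerance=0.05):
    """Return an alert for every metric that fell more than `tolerance` below baseline."""
    return [
        f"{name} dropped from {baseline[name]:.2f} to {current[name]:.2f}"
        for name in baseline
        if baseline[name] - current[name] > tolerance
    ]
```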

Every deployed model has the potential to degrade over time due to such issues as:

- Variance in deployed data. Often, the data given to the model in deployment is not cleaned in the same manner as the training and testing data were, resulting in changes in model performance.

- Changes in data integrity. Over weeks, months or years, changes to the data being fed to the model, such as new formats, renamed fields or new categories, can adversely affect its performance.

- Data drift. Changes in demographics, market shifts and so on can cause drift over time, making the training data less relevant to the current situation and the model’s results therefore less precise.

- Concept drift. End users' expectations of what constitutes a correct prediction can change over time, making the model's predictions less relevant.
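Data drift in a numeric input can be quantified in several ways; one common choice, swapped in here for illustration, is the population stability index (PSI), which compares how training data and recent production data are distributed across bins. The sketch below assumes one numeric feature at a time and takes bin edges from quantiles of the training sample. A frequently cited rule of thumb treats a PSI above about 0.25 as a significant shift, but any alert threshold is a judgment call. Concept drift usually cannot be detected from inputs alone, because it concerns what counts as a correct prediction, so it is typically caught by comparing predictions with actual outcomes.

```python
import math
from bisect import bisect_right


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a training-time sample (expected) and a production sample (actual).

    PSI = sum over bins of (actual_share - expected_share) * ln(actual_share / expected_share).
    Bin edges come from quantiles of the expected sample, so each bin holds roughly
    the same share of training data. eps keeps empty bins from causing log(0).
    """
    ordered = sorted(expected)
    # Interior cut points at the 1/bins, 2/bins, ... quantiles of the training data.
    edges = [ordered[int(len(ordered) * i / bins)] for i in range(1, bins)]

    def shares(sample):
        counts = [0] * bins
        for value in sample:
            counts[bisect_right(edges, value)] += 1  # index in 0..bins-1
        return [max(c / len(sample), eps) for c in counts]

    return sum(
        (a - e) * math.log(a / e)
        for e, a in zip(shares(expected), shares(actual))
    )
```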

With an enterprise data science platform, you can use a variety of monitoring tools to check for each of these problems automatically and alert your data science team as soon as a change in the model's inputs or performance is detected.
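Changes in data integrity, such as renamed fields, changed formats or new categories, are among the simplest of these problems to check automatically. A minimal sketch, assuming a schema recorded from the training data: `EXPECTED_TYPES`, `KNOWN_CATEGORIES` and `integrity_issues` are hypothetical names, and the fields shown are invented for the example. Each incoming record is compared with the schema, and any issues returned could be forwarded to the team's alerting tool.

```python
# Hypothetical schema captured from the training data: field name -> expected
# type, plus the category values the model saw during training.
EXPECTED_TYPES = {"age": int, "income": float, "region": str}
KNOWN_CATEGORIES = {"region": {"north", "south", "east", "west"}}


def integrity_issues(record: dict) -> list:
    """List the ways one incoming record departs from the training-time schema."""
    issues = []
    for field, expected_type in EXPECTED_TYPES.items():
        if field not in record:
            issues.append(f"missing field {field!r}")  # e.g. a renamed field
        elif not isinstance(record[field], expected_type):
            issues.append(
                f"{field!r} is {type(record[field]).__name__}, "
                f"expected {expected_type.__name__}"  # e.g. a format change
            )
    for field in sorted(record.keys() - EXPECTED_TYPES.keys()):
        issues.append(f"unexpected field {field!r}")
    for field, known in KNOWN_CATEGORIES.items():
        value = record.get(field)
        if isinstance(value, str) and value not in known:
            issues.append(f"unseen category {value!r} in {field!r}")
    return issues
```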

Model Deployment and Monitoring

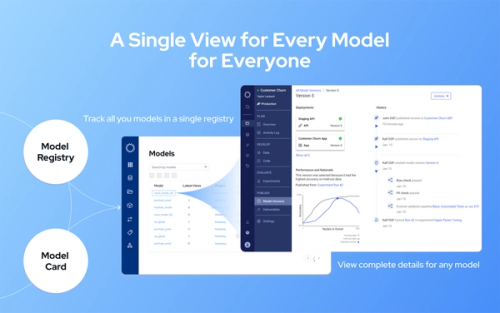

Successfully deploying and monitoring a machine learning model requires a diverse range of skill sets and collaboration among people on different teams. It also requires experience and access to tools that help these teams work together efficiently. Model-driven organizations that successfully deploy models week after week rely on tools and resources consolidated within a single ML operations platform.

David Weedmark is a published author who has worked as a project manager, software developer and network-security consultant.
