Kubernetes

What is Kubernetes?

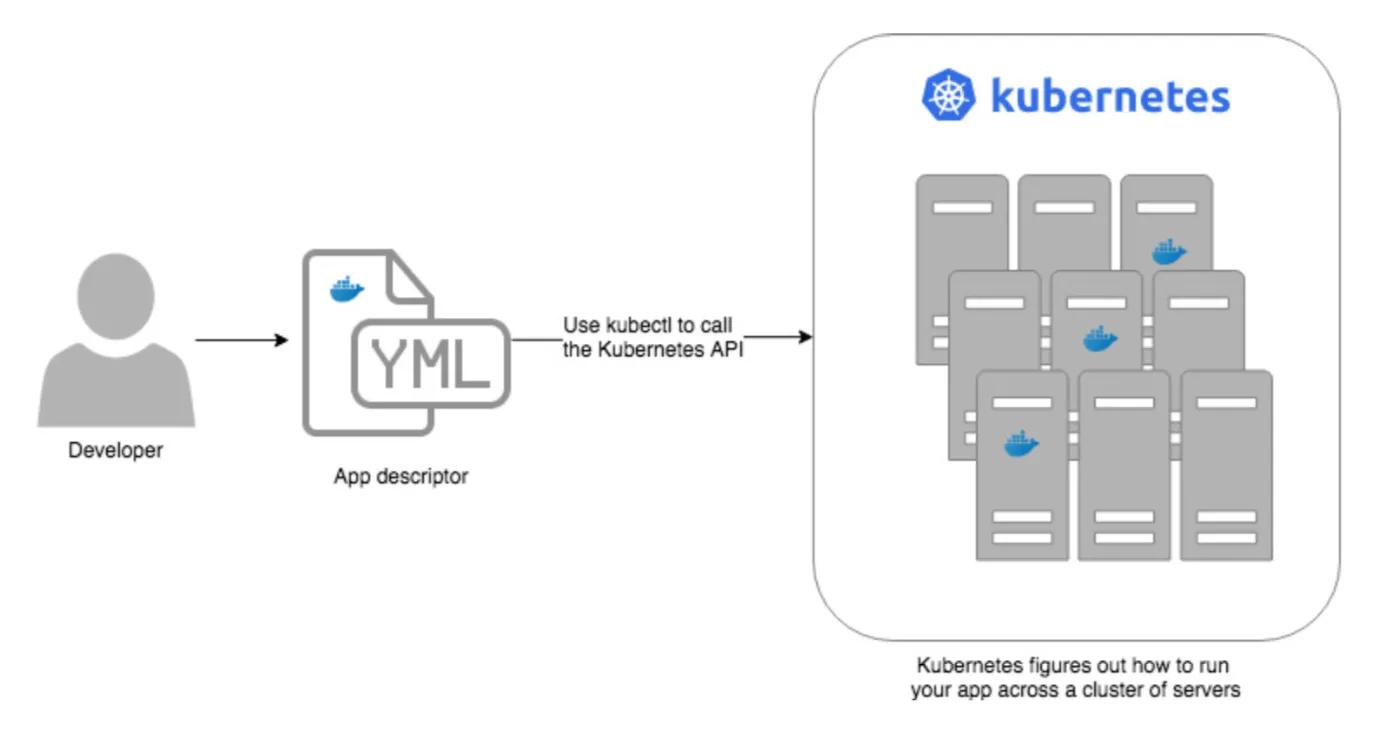

Kubernetes is an open-source container orchestration system for automating application deployment, scaling, and management. Kubernetes (also known as K8s) was developed to manage the complex architecture of multiple containers (e.g., Docker containers) and hosts running in production environments. K8s is quickly becoming essential to IT departments as they move toward containerized applications and microservices.

Docker and containerized applications have become extremely popular in enterprise computing and data science over the last few years. Docker has not only enabled powerful new DevOps processes, but it has also elegantly solved the problem of environment management.

Kubernetes helps you manage containerized applications (e.g., apps running in Docker containers) across a group of machines. K8s is like an operating system for your data center: it abstracts away the underlying hardware behind its API.
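Managing applications across a group of machines means deciding which machine runs each container. The toy sketch below is loosely modeled on the Kubernetes scheduler's two-step idea of first filtering out nodes that cannot fit a workload and then scoring the remaining ones. The `Node` and `Pod` classes, the resource units (CPU millicores, memory MiB), and the "least allocated" scoring rule are simplifying assumptions for illustration, not the real kube-scheduler API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    # Hypothetical machine model: capacity in CPU millicores and memory MiB.
    name: str
    cpu_capacity: int
    mem_capacity: int
    cpu_used: int = 0
    mem_used: int = 0


@dataclass
class Pod:
    # Hypothetical workload model: the resources it requests.
    name: str
    cpu_request: int
    mem_request: int


def fits(pod: Pod, node: Node) -> bool:
    # Filter step: the node must have enough unreserved CPU and memory.
    return (node.cpu_capacity - node.cpu_used >= pod.cpu_request
            and node.mem_capacity - node.mem_used >= pod.mem_request)


def score(pod: Pod, node: Node) -> float:
    # Score step ("least allocated" style): average fraction of CPU and memory
    # that would remain free after placing the pod. Higher is better.
    cpu_free = (node.cpu_capacity - node.cpu_used - pod.cpu_request) / node.cpu_capacity
    mem_free = (node.mem_capacity - node.mem_used - pod.mem_request) / node.mem_capacity
    return (cpu_free + mem_free) / 2


def schedule(pods: List[Pod], nodes: List[Node]) -> Dict[str, Optional[str]]:
    # Place each pod in order; None means no node fits (the pod stays pending).
    placements: Dict[str, Optional[str]] = {}
    for pod in pods:
        candidates = [n for n in nodes if fits(pod, n)]
        if not candidates:
            placements[pod.name] = None
            continue
        best = max(candidates, key=lambda n: score(pod, n))  # first node wins ties
        best.cpu_used += pod.cpu_request
        best.mem_used += pod.mem_request
        placements[pod.name] = best.name
    return placements


nodes = [Node("node-a", 4000, 8192), Node("node-b", 4000, 8192)]
pods = [Pod("web", 1000, 1024), Pod("api", 1000, 1024), Pod("huge", 8000, 1024)]
placements = schedule(pods, nodes)
print(placements)  # → {'web': 'node-a', 'api': 'node-b', 'huge': None}
```

The scoring rule spreads work across machines (the second pod lands on the emptier node), and a request no machine can satisfy is left unplaced rather than overcommitting hardware.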

Figure: Kubernetes architecture (Source: Gruntwork)

Kubernetes is becoming the platform infrastructure layer for the cloud. Before K8s, deploying across multiple virtual machines (VMs) often meant dealing with arbitrary load balancers, networks, different operating systems, and so on. There was so much variation, even within a single cloud service, that you couldn't take a distributed application and make it truly portable. Kubernetes, on the other hand, can be used as consistently programmable infrastructure: it makes networking, load balancing, resource management, and other deployment concerns consistent. Kubernetes can run on any substrate, be it virtual machines or bare metal.

Just as various vendors offer Linux distributions built on the Linux kernel, there are different flavors of Kubernetes distributions that bundle container tooling. Companies moving to Kubernetes need to decide which flavor to adopt and how to deploy it. For on-premises deployments, OpenShift, Rancher, and Tanzu (formerly Pivotal Container Service, before the VMware acquisition) are popular flavors. If you already favor a specific cloud provider, its managed Kubernetes service is typically the easiest deployment option. All the major cloud providers offer their own flavor of managed Kubernetes, such as Amazon EKS, Google GKE, and Microsoft AKS.

Kubernetes brings a whole new set of benefits to containerized applications, including efficiency and better resource utilization. With autoscaling configured, K8s gives data scientists scalable access to CPUs and GPUs: capacity increases automatically when a computation requires a burst of activity and scales back down when it finishes. This is a tremendous asset, especially in the cloud, where costs are based on the resources consumed. Scaling a cluster up or down is quick and easy because it's just a matter of adding virtual machines to, or removing them from, the cluster. This dynamic resource utilization is especially beneficial for data science workloads, because demand for high-powered CPUs, GPUs, and RAM can be extremely intensive when training models or engineering features and then drop off again very quickly.
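At the workload level, this kind of automatic scale-up and scale-down is commonly handled by the Horizontal Pod Autoscaler (HPA), whose documented rule is roughly desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The function below is a minimal sketch of that rule under simplifying assumptions: the name `desired_replicas`, its parameters, and the min/max defaults are hypothetical, and the real controller also handles stabilization windows, missing metrics, and multiple metrics. Adding or removing the VMs themselves is the job of a separate node autoscaler (e.g., the Cluster Autoscaler), which is not modeled here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Sketch of the HPA replica rule: ceil(current * current_metric / target)."""
    ratio = current_metric / target_metric
    # Close enough to the target: skip scaling to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical defaults here).
    return max(min_replicas, min(max_replicas, desired))


# Burst: 4 replicas at 90% average CPU against a 50% target, so scale up.
print(desired_replicas(4, 90, 50))   # → 8
# Quiet period: 8 replicas at 20% against a 50% target, so scale down.
print(desired_replicas(8, 20, 50))   # → 4
# Within the 10% tolerance band, so the count is unchanged.
print(desired_replicas(4, 52, 50))   # → 4
```

The tolerance band and the min/max clamp are what keep a bursty training or feature-engineering job from thrashing the replica count or running up unbounded cloud costs.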

Summary