Model Drift

What is Model Drift?

Model drift is the decay of models' predictive power as a result of the changes in real world environments. It is caused due to a variety of reasons including changes in the digital environment and ensuing changes in relationship between variables. There are two types of model drift,

- Concept Drift: Is a type of model drift where the properties of the dependent variable change. The function which modeled the relationship between features and the dependent variable is no longer suitable for the environment. For example, the definition of a spam email has evolved over time.

- Data Drift: It is the type of model drift where the underlying distributions of the features have changed over time. This can happen due to many causes, such as seasonal behavior or change in the underlying population. Change in feature values due to the pandemic is an example of data drift.

Why do models experience drift?

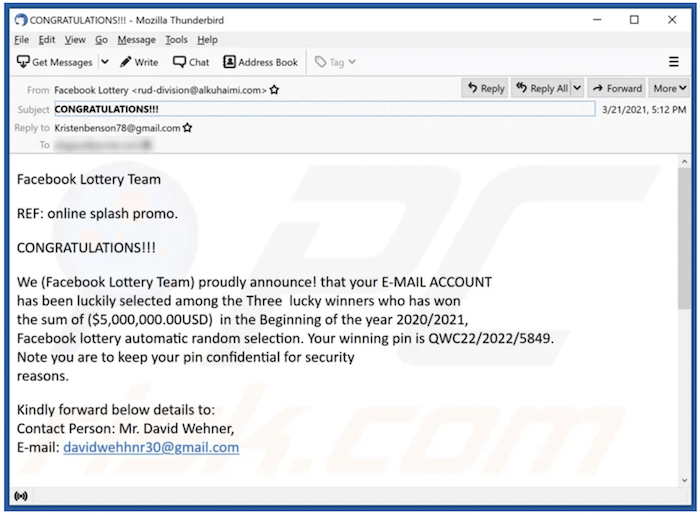

Let’s understand this using a real-world scenario. Natural language processing algorithms have been used for spam filtering since their introduction. They have been used to classify emails as spam and non-spam to defend users from different types of attacks from spammers. Here is an example of an older spam email.

Models learn features such as a "very high amount," "lottery,” etc. to identify this kind of spam. But through the years spammers have improved and have introduced a lot of new spamming methods, which the model hasn’t seen before. This leads to the degradation of model performance. For example,

A model trained with data collected five or ten years ago can’t perform well against such new forms of spam. Here, one of the basic assumptions of machine learning has been violated. That is that future data will have similar distribution as the training data. It is necessary to identify these changes and make updates to models.

How do you detect model drift?

Models and data distributions should be continuously monitored to detect model drift. Some popular methods to quantify model drift are shown below.

- Continuous Evaluation: In this method, the model performance is validated after every n number of days using newly labeled test data drawn from a recent time period.The model drift can be understood by comparing the performance of the model in the newly drawn test data with older ones.

- Population Stability Index (PSI): It is a metric to quantify how much a variable has changed in distribution between two samples drawn at different times.PSI for features are calculated to identify drift. If this value is less than 0.1 it indicates very slight change, a value between 0.1 to 0.2 indicates some minor change and a value greater than 0.2 indicates significant change in the distribution.

- Z-Score: The feature distribution of training data and live data can be compared using z-score. A z-score of +/- 3 indicates that the data might have changed.

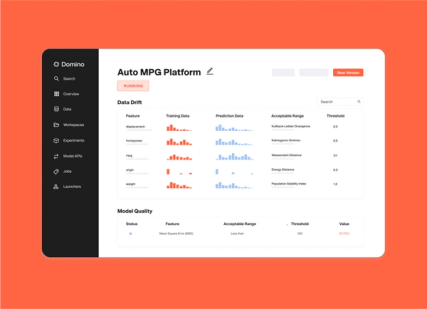

Drift detection with Domino Integrated Model Monitoring

Setting up pipelines and thresholds to detect model drift in production can be a tedious job. Domino’s integrated model monitoring tool helps you detect model drift for operationalized models. Domino will automatically alert you when drift, divergence, and data quality checks exceed thresholds. It's easy to drill down to model features to modify, retrain, and redeploy models quickly. Integrated model monitoring streamlines monitoring setup with simplified configuration and reproducible development environments, designed to save time when diagnosing, rebuilding and redeploying models.