Interpretability

What is interpretability in machine learning?

Interpretable machine learning means humans can capture relevant knowledge from a model concerning relationships either contained in data or learned by the model. Machine learning algorithms have historically been “black boxes”, that provided no way to understand their inner processes, and made it difficult to explain resulting insights to regulatory agencies and stakeholders.

Some models, such as those that use linear regression, are highly transparent and interpretable. But other increasingly popular models, such as ones based on deep learning algorithms, have many “hidden layers,” so interpretability gets more and more difficult.

Interpretability is about the extent to which a cause and effect can be observed within a system. Or to put it another way, it is the extent to which you are able to predict what is going to happen, given a change in input or algorithmic parameters. It’s being able to understand which inputs are the most predictive (i.e., impact the prediction/output the most), and anticipate how predictions will change with differing inputs.

Interpretability should not be confused with “explainability.” Explainability is the extent to which the internal mechanics of a machine or deep learning system can be explained in human terms. It’s easy to miss the subtle difference with interpretability, but consider it like this: interpretability is about being able to discern the mechanics without necessarily knowing why. Explainability is being able to quite literally explain what is happening.

Not all machine learning systems require interpretability. Interpretability is not required when there are no significant consequences for unacceptable results, or when the machine learning is validated and trusted in real applications. Interpretability is needed when these conditions are not met, and questions about bias, trust, safety, ethics, and mismatched objectives arise.

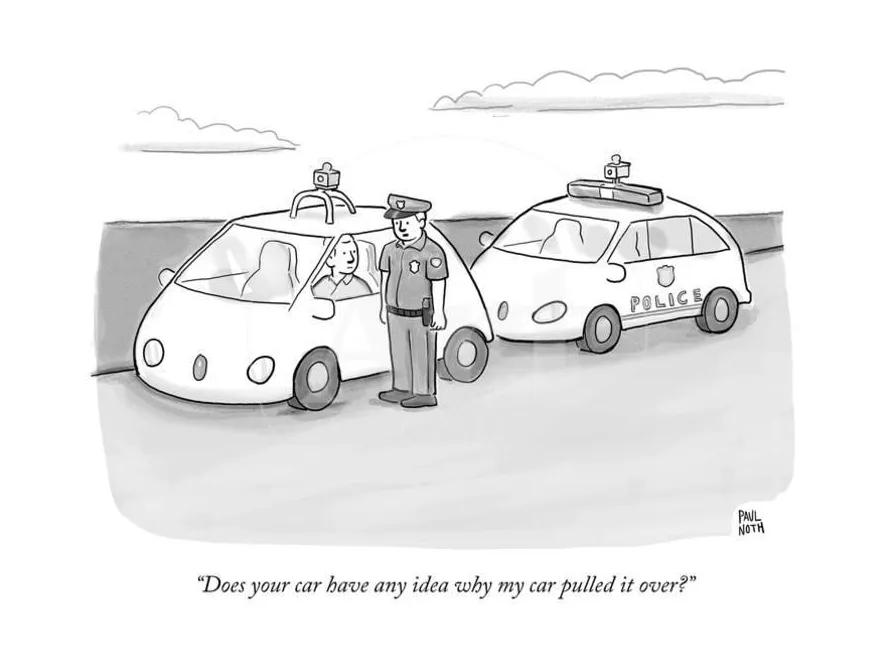

Policing (and interpretability) in the future

Source: Paul Noth